Previously we deployed Oracle 11g RAC in a VMware vSphere virtualized environment. This time we walk through deploying Oracle 19c RAC on VMware vSphere.

1. Basic Environment

1.1 Installation Environment

Host platform: VMware vSphere 5.5

RAC node operating system: CentOS Linux release 7.6.1810 (Core)

Oracle Database software: Oracle 19c (19.3)

Cluster software: Oracle Grid Infrastructure 19c

Shared storage: ASM

1.2 Network Plan

| Network setting | Node 1 | Node 2 | Notes |
|---|---|---|---|
| Hostname | oracle19crac01 | oracle19crac02 | |
| Public IP | 172.16.200.21 | 172.16.200.22 | |
| Private IP | 192.168.0.21 | 192.168.0.22 | |
| VIP | 172.16.200.23 | 172.16.200.24 | |
| SCAN IP | 172.16.200.25 | 172.16.200.25 | |

1.3 ASM Disk Group Plan

| ASM disk group | Purpose | Disk names | Size | ASM redundancy |
|---|---|---|---|---|
| SYSDG | OCR and voting disks (19c Grid) | SYSDG_01_5G, SYSDG_02_5G, SYSDG_03_5G | 5G+5G+5G | NORMAL |
| DATADG | Data files | DATA_01_50G, DATA_02_50G | 50G+50G | EXTERNAL |
| FRADG | Flashback, archive logs, backups | FRADG_01_10G, FRADG_02_10G | 10G+10G | EXTERNAL |

1.4 Oracle Components

| Component | Grid | Oracle |
|---|---|---|
| Owner | grid | oracle |
| Secondary groups | asmadmin, asmdba, asmoper, dba | dba, asmdba, backupdba, dgdba, kmdba, racdba, oper |
| Home directory | /home/grid | /home/oracle |
| Oracle base | /u01/app/grid | /u01/app/oracle |
| Oracle home | /u01/app/19.0.0/grid | /u01/app/oracle/product/19.0.0/dbhome_1 |

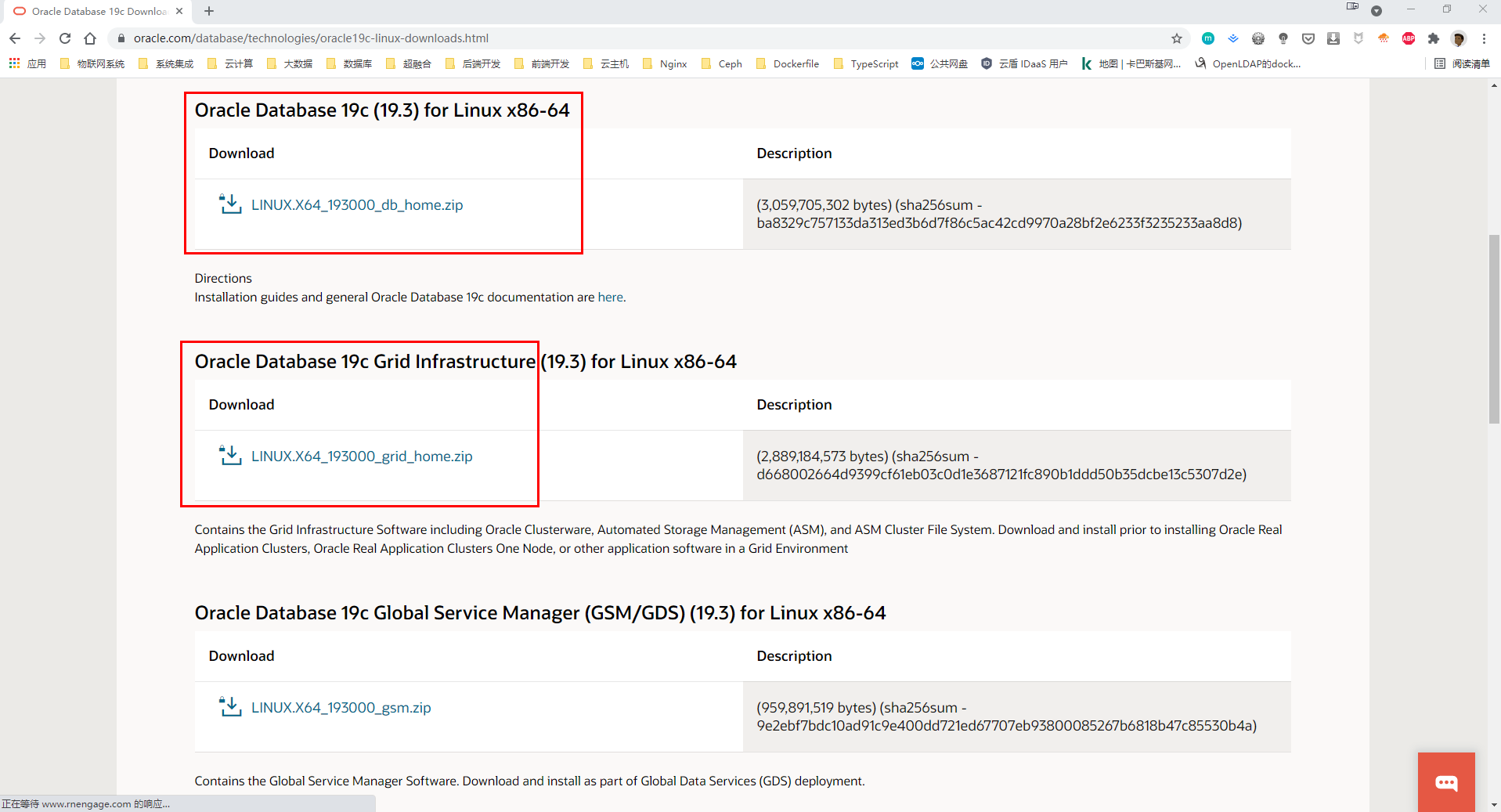

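1.5 Oracle 19c Installation Packages

Download the 19c (19.3) installation packages from the Oracle website: LINUX.X64_193000_db_home.zip and LINUX.X64_193000_grid_home.zip

2. Prepare the Operating System

Note: creating the virtual machines and installing the operating system are not covered here.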

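2.1 Configure the Hostname and hosts File

# Run on node 1 (replace the names below with your own hostnames)

hostnamectl set-hostname oracle19crac01

# Run on node 2

hostnamectl set-hostname oracle19crac02

# Configure the hosts file (on both nodes)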

cat >> /etc/hosts <<EOF

# Public ip ens160

172.16.200.21 oracle19crac01

172.16.200.22 oracle19crac02

# Private ip ens192

192.168.0.21 oracle19crac01-priv

192.168.0.22 oracle19crac02-priv

# VIP ens160

172.16.200.23 oracle19crac01-vip

172.16.200.24 oracle19crac02-vip

# Scan ip ens160

172.16.200.25 oracle19crac-scan

EOF

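Note: no DNS is configured here. When DNS cannot resolve the hostnames, add the hostname-to-IP mappings to /etc/hosts on every node. Then log out and log back in as root to see the new hostname take effect. Use lowercase hostnames wherever possible.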
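Before moving on, it can help to confirm that every cluster name from the network plan is present in the hosts file on both nodes. The following is a minimal sketch: the function name `missing_hosts` is hypothetical, and the name list assumes the hostnames used in this article.

```shell
#!/usr/bin/env bash
# missing_hosts FILE NAME...  (hypothetical helper, not part of any Oracle tool)
# Prints every NAME that has no entry in the hosts-format FILE.
missing_hosts() {
    local file=$1; shift
    local name
    for name in "$@"; do
        # Skip comment lines; match NAME as a whole field after the IP address.
        if ! awk -v n="$name" '
                /^[[:space:]]*#/ { next }
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit !found }' "$file"; then
            echo "$name"
        fi
    done
}

# Example: check the names used in this article against /etc/hosts.
missing_hosts /etc/hosts \
    oracle19crac01 oracle19crac02 \
    oracle19crac01-priv oracle19crac02-priv \
    oracle19crac01-vip oracle19crac02-vip \
    oracle19crac-scan
```

An empty result means every name is mapped; any name printed still needs an entry.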

2.2 Disable the Firewall

[root@oracle19crac01 ~]# systemctl stop firewalld && systemctl disable firewalld

[root@oracle19crac01 ~]# systemctl status firewalld

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

[root@oracle19crac01 ~]#

2.3 Disable SELinux

cat << EOF > /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

EOF

[root@oracle19crac01 ~]# setenforce 0

[root@oracle19crac01 ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

[root@oracle19crac01 ~]#
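Overwriting the whole file works, but a typo in the value is easy to miss. As an alternative sketch, the helper below changes only the SELINUX= line and rejects values other than the three the file documents. The function name `set_selinux_mode` is hypothetical.

```shell
#!/usr/bin/env bash
# set_selinux_mode FILE MODE  (hypothetical helper)
# Rewrites only the SELINUX= line of FILE; comments and SELINUXTYPE stay untouched.
set_selinux_mode() {
    local file=$1 mode=$2 tmp rc
    case $mode in
        enforcing|permissive|disabled) ;;
        *) echo "invalid SELinux mode: $mode" >&2; return 1 ;;
    esac
    tmp=$(mktemp) || return 1
    # Write through cat so the original file keeps its permissions.
    sed "s/^SELINUX=.*/SELINUX=$mode/" "$file" > "$tmp" && cat "$tmp" > "$file"
    rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Usage on a node, as root:
#   set_selinux_mode /etc/selinux/config disabled
#   setenforce 0    # permissive for the running system until the next reboot
```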

2.4 Install Dependency Packages

yum install -y binutils compat-libcap1 compat-libstdc++-33 compat-libstdc++-33.i686 gcc gcc-c++ glibc glibc.i686 glibc-devel glibc-devel.i686 ksh libgcc libgcc.i686 libstdc++ libstdc++.i686 libstdc++-devel libstdc++-devel.i686 libaio libaio.i686 libaio-devel libaio-devel.i686 libXext libXext.i686 libXtst libXtst.i686 libX11 libX11.i686 libXau libXau.i686 libxcb libxcb.i686 libXi libXi.i686 make sysstat unixODBC unixODBC-devel readline libtermcap-devel bc compat-libstdc++ elfutils-libelf elfutils-libelf-devel fontconfig-devel libXi libXtst libXrender libXrender-devel libgcc librdmacm-devel libstdc++ libstdc++-devel net-tools nfs-utils python python-configshell python-rtslib python-six targetcli smartmontools xorg-x11-xauth xterm unzip

Note: on RHEL 7, one additional standalone package must be installed separately: rpm -ivh compat-libstdc++-33-3.2.3-72.el7.x86_64.rpm

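2.5 Disable NTP

Note: CentOS 7.6 was installed with the minimal profile, which does not install NTP (ntpd) by default, so the ntpd commands below report that the service does not exist.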

[root@oracle19crac01 ~]# systemctl stop chronyd.service

[root@oracle19crac01 ~]# systemctl disable chronyd.service

Removed symlink /etc/systemd/system/multi-user.target.wants/chronyd.service.

[root@oracle19crac01 ~]# rm -rf /etc/chrony.conf

[root@oracle19crac01 ~]# systemctl status ntpd

Unit ntpd.service could not be found.

[root@oracle19crac01 ~]# systemctl disable ntpd.service

Failed to execute operation: No such file or directory

[root@oracle19crac01 ~]# systemctl stop ntpd.service

Failed to stop ntpd.service: Unit ntpd.service not loaded.

[root@oracle19crac01 ~]# mv /etc/ntp.conf /etc/ntp.conf.bak

mv: cannot stat ‘/etc/ntp.conf’: No such file or directory

[root@oracle19crac01 ~]#

[root@oracle19crac01 ~]# timedatectl set-timezone Asia/Shanghai

[root@oracle19crac01 ~]# timedatectl list-timezones | grep Shanghai

Asia/Shanghai

[root@oracle19crac01 ~]#

2.6 Configure a Local Yum Repository (Optional)

mkdir -p /mnt/cdrom

mount /dev/cdrom /mnt/cdrom

cd /etc/yum.repos.d/

rename .repo .repo.bak *

touch /etc/yum.repos.d/CentOS-Media.repo

cat <<EOF >/etc/yum.repos.d/CentOS-Media.repo

[local]

name=local

baseurl=file:///mnt/cdrom

gpgcheck=0

enabled=1

EOF

Note: add a local Yum repository if you need one to install the dependency packages.

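2.7 Resize /dev/shm

Linux distributions enable tmpfs in the default kernel configuration and mount it at /dev/shm, which the df command shows. /dev/shm is useful because it lives in memory rather than on disk, so there is no need to build a RAM disk by hand. tmpfs is often compared with a RAM disk, but the two differ: like a RAM disk, tmpfs uses RAM, but it can also use swap space for storage. A traditional RAM disk is a block device that needs a command such as mkfs before it can be used, whereas tmpfs is a file system rather than a block device; it is usable as soon as it is mounted.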

[root@oracle19crac01 ~]# vi /etc/fstab

tmpfs /dev/shm tmpfs defaults,size=10g 0 0

# After adding the line above, run mount -o remount /dev/shm

[root@oracle19crac01 ~]# mount -o remount /dev/shm

[root@oracle19crac01 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 44G 16G 29G 36% /

devtmpfs 3.9G 0 3.9G 0% /dev

tmpfs 10G 0 10G 0% /dev/shm

tmpfs 3.9G 8.9M 3.9G 1% /run

tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/sda1 1014M 146M 869M 15% /boot

tmpfs 783M 0 783M 0% /run/user/0

[root@oracle19crac01 ~]#

3. Configure CentOS

3.1 Create Users and Groups

groupadd -g 54321 oinstall

groupadd -g 54322 dba

groupadd -g 54323 oper

groupadd -g 54324 backupdba

groupadd -g 54325 dgdba

groupadd -g 54326 kmdba

groupadd -g 54327 asmdba

groupadd -g 54328 asmoper

groupadd -g 54329 asmadmin

groupadd -g 54330 racdba

useradd -u 54321 -g oinstall -G dba,asmdba,backupdba,dgdba,kmdba,racdba,oper oracle

useradd -u 54322 -g oinstall -G asmadmin,asmdba,asmoper,dba grid

# Set the passwords for the oracle and grid users

[root@oracle19crac01 ~]# echo "oracle" | passwd --stdin oracle

Changing password for user oracle.

passwd: all authentication tokens updated successfully.

[root@oracle19crac01 ~]# echo "grid" | passwd --stdin grid

Changing password for user grid.

passwd: all authentication tokens updated successfully.

[root@oracle19crac01 ~]#
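Since the group lists are long, a quick comparison against the plan can catch a missed `-G` entry. This is a small sketch; `missing_groups` is a hypothetical helper, and the expected lists are the ones from the useradd commands above.

```shell
#!/usr/bin/env bash
# missing_groups "HAVE" "WANT"  (hypothetical helper)
# HAVE and WANT are space-separated group lists; prints each WANT group absent from HAVE.
missing_groups() {
    local have=" $1 " g
    for g in $2; do
        case $have in
            *" $g "*) ;;
            *) echo "$g" ;;
        esac
    done
}

# Usage on a node (id -nG prints a user's groups separated by spaces):
#   missing_groups "$(id -nG grid)"   "oinstall asmadmin asmdba asmoper dba"
#   missing_groups "$(id -nG oracle)" "oinstall dba asmdba backupdba dgdba kmdba racdba oper"
```

No output means the user belongs to every expected group.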

3.2 Create Directories

mkdir -p /u01/app/19.0.0/grid

mkdir -p /u01/app/grid

mkdir -p /u01/app/oracle/product/19.0.0/dbhome_1

chown -R grid:oinstall /u01

chown -R oracle:oinstall /u01/app/oracle

chmod -R 775 /u01/

3.3 Configure the Maximum Number of Processes

cat << EOF > /etc/security/limits.d/20-nproc.conf

# Default limit for number of user's processes to prevent

# accidental fork bombs.

# See rhbz #432903 for reasoning.

# * soft nproc 4096

root soft nproc unlimited

* - nproc 16384

EOF

3.4 Set Per-User Resource Limits

cat >> /etc/security/limits.conf <<EOF

# ORACLE SETTING

grid soft nproc 16384

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

grid soft stack 10240

grid hard stack 10240

oracle soft nproc 16384

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft stack 10240

oracle hard stack 32768

oracle soft memlock 6291456

oracle hard memlock 6291456

EOF

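# memlock should be slightly smaller than the configured memory, that is, set large enough. Unit: KB

# 4194304 means 4 GB and 8388608 means 8 GB; the 6291456 used above is 6 GB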
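The memlock figures are just a size in GB converted to KB. A one-line helper (the name `memlock_kb` is made up for this sketch) makes the conversion explicit:

```shell
#!/usr/bin/env bash
# memlock_kb GB: convert a size in GB to the KB unit used for memlock in limits.conf.
memlock_kb() {
    echo $(( $1 * 1024 * 1024 ))
}

memlock_kb 4   # prints 4194304
memlock_kb 6   # prints 6291456, the value used in this article
memlock_kb 8   # prints 8388608
```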

3.5 Apply Limits at Login (PAM)

cat >> /etc/pam.d/login <<EOF

# ORACLE SETTING

session required pam_limits.so

EOF

3.6 Modify Kernel Parameters

cat >> /etc/sysctl.conf <<EOF

#### fs setting

fs.aio-max-nr = 4194304

fs.file-max = 6815744

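#### kernel setting: values of kernel.shmall and kernel.shmmax that are too large can make dbca fail and keep the instances on both nodes from starting; if that happens, set both parameters as the official documentation requires

## Total shared memory allowed, in pages; can be set to shmmax/4096

kernel.shmall = 1415577

## Maximum size of a single segment, in bytes; can be set to 90% of RAM, e.g. for 6 GB of RAM:

## 6*1024*1024*1024*90%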

kernel.shmmax = 5798205849

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

kernel.panic_on_oops = 1

kernel.panic = 10

#### Net Setting

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 4194304

## TCP Cache Setting

net.ipv4.tcp_moderate_rcvbuf=1

net.ipv4.tcp_rmem = 4096 87380 4194304

net.ipv4.tcp_wmem = 4096 16384 4194304

### Replace ens160 and ens192 below with the NIC names on your own machine; with two NICs, set only those two

net.ipv4.conf.ens160.rp_filter = 2

net.ipv4.conf.ens192.rp_filter = 2

#### Memory Setting

vm.vfs_cache_pressure=200

vm.swappiness=10

vm.min_free_kbytes=102400

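## On production virtual machines it is advisable to leave huge pages off; otherwise the system may report insufficient resources and database connections may fail. This parameter is not set here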

# vm.nr_hugepages=10

EOF

# Run the following command to apply the settings

/sbin/sysctl -p
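The shmmax and shmall values follow the rule of thumb stated in the sysctl comments: shmmax is 90% of RAM in bytes, and shmall is shmmax divided by the 4096-byte page size. A minimal sketch of that arithmetic, with a hypothetical function name `shm_params` and the 6 GB example from the comments:

```shell
#!/usr/bin/env bash
# shm_params MEM_GB: print kernel.shmmax (90% of RAM, in bytes) and
# kernel.shmall (shmmax expressed in 4096-byte pages).
shm_params() {
    local mem_bytes=$(( $1 * 1024 * 1024 * 1024 ))
    local shmmax=$(( mem_bytes * 90 / 100 ))
    local shmall=$(( shmmax / 4096 ))   # assumes a 4096-byte page size
    echo "kernel.shmmax = $shmmax"
    echo "kernel.shmall = $shmall"
}

shm_params 6
```

For 6 GB this reproduces the two values used in the sysctl block (5798205849 and 1415577).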

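3.7 Disable avahi-daemon

Note: a minimal installation does not include this service.

systemctl disable avahi-daemon.socket && systemctl disable avahi-daemon.service

ps -ef | grep avahi-daemon

# Replace <pid> with the avahi-daemon process ID reported by ps

kill -9 <pid>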

3.8 Disable Zeroconf

cat >> /etc/sysconfig/network <<EOF

NOZEROCONF=yes

EOF

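Note: ZEROCONF is also known as IPv4 Link-Local (IPv4LL) and Automatic Private IP Addressing (APIPA). It is a dynamic configuration protocol that a system can use to connect to a network. Many Linux distributions install it by default: when the system cannot reach a DHCP server, it tries to obtain an IP address through ZEROCONF. Adding NOZEROCONF=yes disables it.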

3.9 Configure Environment Variables for the grid User

cat >>/home/grid/.bash_profile <<EOF

EDITOR=vi

export EDITOR

export PS1=\`whoami\`@\`hostname\`\['\$ORACLE_SID'\]'\$PWD\$'

# On node 2, change this to +ASM2

ORACLE_SID=+ASM1

export ORACLE_SID

ORACLE_BASE=/u01/app/grid

export ORACLE_BASE

ORACLE_HOME=/u01/app/19.0.0/grid

export ORACLE_HOME

ORACLE_PATH=/u01/app/oracle/common/oracle/sql

export ORACLE_PATH

ORACLE_TERM=xterm

export ORACLE_TERM

NLS_DATE_FORMAT="DD-MON-YYYY HH24:MI:SS"

export NLS_DATE_FORMAT

export NLS_LANG=AMERICAN_AMERICA.zhs16gbk

TNS_ADMIN=\$ORACLE_HOME/network/admin

export TNS_ADMIN

ORA_NLS11=\$ORACLE_HOME/nls/data

export ORA_NLS11

PATH=.:\${JAVA_HOME}/bin:\${PATH}:\$HOME/bin:\$ORACLE_HOME/bin:\$ORACLE_HOME/OPatch

PATH=\${PATH}:/usr/bin:/bin:/usr/bin/X11:/usr/local/bin

PATH=\${PATH}:/u01/app/common/oracle/bin

export PATH

LD_LIBRARY_PATH=\$ORACLE_HOME/lib

LD_LIBRARY_PATH=\${LD_LIBRARY_PATH}:\$ORACLE_HOME/oracm/lib

LD_LIBRARY_PATH=\${LD_LIBRARY_PATH}:/lib:/usr/lib:/usr/local/lib

export LD_LIBRARY_PATH

CLASSPATH=\$ORACLE_HOME/JRE

CLASSPATH=\${CLASSPATH}:\$ORACLE_HOME/jlib

CLASSPATH=\${CLASSPATH}:\$ORACLE_HOME/rdbms/jlib

CLASSPATH=\${CLASSPATH}:\$ORACLE_HOME/network/jlib

export CLASSPATH

THREADS_FLAG=native;export THREADS_FLAG

export TEMP=/tmp

export TMPDIR=/tmp

umask 022

echo ' '

echo '\$ORACLE_SID: '\$ORACLE_SID

echo '\$ORACLE_HOME: '\$ORACLE_HOME

echo ' '

EOF

3.10 Configure Environment Variables for the oracle User

cat >>/home/oracle/.bash_profile <<EOF

EDITOR=vi

export EDITOR

export PS1=\`whoami\`@\`hostname\`\['\$ORACLE_SID'\]'\$PWD\$'

# On node 2, change this to racdb2

ORACLE_SID=racdb1

export ORACLE_SID

ORACLE_UNQNAME=racdb

export ORACLE_UNQNAME

JAVA_HOME=/usr/local/java

export JAVA_HOME

ORACLE_BASE=/u01/app/oracle

export ORACLE_BASE

ORACLE_HOME=\$ORACLE_BASE/product/19.0.0/dbhome_1

export ORACLE_HOME

ORACLE_PATH=/u01/app/common/oracle/sql

export ORACLE_PATH

ORACLE_TERM=xterm

export ORACLE_TERM

NLS_DATE_FORMAT="DD-MON-YYYY HH24:MI:SS"

export NLS_DATE_FORMAT

TNS_ADMIN=\$ORACLE_HOME/network/admin

export TNS_ADMIN

ORA_NLS11=\$ORACLE_HOME/nls/data

export ORA_NLS11

PATH=.:\${JAVA_HOME}/bin:\${PATH}:\$HOME/bin:\$ORACLE_HOME/bin:\$ORACLE_HOME/OPatch

PATH=\${PATH}:/usr/bin:/bin:/usr/bin/X11:/usr/local/bin

PATH=\${PATH}:/u01/app/common/oracle/bin

export PATH

LD_LIBRARY_PATH=\$ORACLE_HOME/lib

LD_LIBRARY_PATH=\${LD_LIBRARY_PATH}:\$ORACLE_HOME/oracm/lib

LD_LIBRARY_PATH=\${LD_LIBRARY_PATH}:/lib:/usr/lib:/usr/local/lib

export LD_LIBRARY_PATH

CLASSPATH=\$ORACLE_HOME/JRE

CLASSPATH=\${CLASSPATH}:\$ORACLE_HOME/jlib

CLASSPATH=\${CLASSPATH}:\$ORACLE_HOME/rdbms/jlib

CLASSPATH=\${CLASSPATH}:\$ORACLE_HOME/network/jlib

export CLASSPATH

THREADS_FLAG=native;export THREADS_FLAG

export TEMP=/tmp

export TMPDIR=/tmp

umask 022

export ORACLE_OWNER=oracle

export NLS_LANG=AMERICAN_AMERICA.zhs16gbk

echo ' '

echo '\$ORACLE_SID: '\$ORACLE_SID

echo '\$ORACLE_HOME: '\$ORACLE_HOME

echo ' '

EOF

3.11 Configure Environment Variables for the root User

Note: add the grid user's $ORACLE_HOME to root's PATH.

cat << 'EOF' > ~/.bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export ORACLE_HOME=/u01/app/19.0.0/grid

PATH=$PATH:$HOME/bin:$ORACLE_HOME/bin

export PATH

EOF
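A heredoc expands variables when its delimiter is unquoted, so a profile written that way stores the value that $PATH had at write time instead of the literal text $PATH. Escaping each dollar sign (as the grid and oracle profiles do) or quoting the delimiter keeps the text literal. The sketch below shows all three forms; it writes only to a temporary file, and `DEMO_VAR` is a made-up variable.

```shell
#!/usr/bin/env bash
# Demonstrates how heredoc quoting controls expansion; writes to a temporary file only.
demo=$(mktemp)
DEMO_VAR=expanded-now

# Unquoted delimiter: $DEMO_VAR is expanded unless the dollar sign is escaped.
cat << EOF > "$demo"
unquoted: $DEMO_VAR
escaped: \$DEMO_VAR
EOF

# Quoted delimiter: nothing inside the heredoc is expanded.
cat << 'EOF' >> "$demo"
quoted: $DEMO_VAR
EOF

cat "$demo"
```

Only the first line comes out with the value substituted; the other two keep the variable reference for the shell that later sources the file.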

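3.12 Disable Transparent Huge Pages

Linux has two kinds of large pages: Huge Pages (standard huge pages) and Transparent Huge Pages. Memory is managed in blocks called pages, and on most systems the default page size is 4096 bytes (4 KB). 1 MB of memory is 256 pages; 1 GB is 262,144 pages. The CPU has a built-in memory management unit that holds a list of these pages, each referenced through a page table entry. As memory grows, the cost of managing those pages grows too, which affects operating system performance.

Huge Pages: Huge Pages were introduced with Linux kernel 2.6 to replace the traditional 4 KB pages with larger ones, so that the operating system can keep up with growing memory sizes and use the large-page support of modern hardware. Huge Pages come in two sizes, 2 MB and 1 GB. The 2 MB size suits memory in the GB range and the 1 GB size suits memory in the TB range; 2 MB is the default.

Transparent Huge Pages: Transparent Huge Pages (THP) were introduced in RHEL 6 and are enabled by default there. Huge Pages are hard to manage by hand and usually require significant code changes to use effectively, so RHEL 6 introduced THP as an abstraction layer that creates, manages, and uses huge pages automatically. THP removes much of that complexity for system administrators and developers. Because THP aims to improve performance, developers from the community and Red Hat have tested and tuned it across many systems, configurations, applications, and workloads, so its default settings improve performance for most system configurations. However, THP is not recommended for database workloads.

The biggest difference between the two is that standard huge pages are preallocated, while transparent huge pages are allocated dynamically.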

[root@oracle19crac01 ~]# echo never > /sys/kernel/mm/transparent_hugepage/enabled

[root@oracle19crac01 ~]# echo never > /sys/kernel/mm/transparent_hugepage/defrag

[root@oracle19crac01 ~]# cat /sys/kernel/mm/transparent_hugepage/defrag

always madvise [never]

[root@oracle19crac01 ~]# cat /sys/kernel/mm/transparent_hugepage/enabled

always madvise [never]

[root@oracle19crac01 ~]#

# Verify

[root@oracle19crac01 ~]# grep Huge /proc/meminfo

AnonHugePages: 8192 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

[root@oracle19crac01 ~]#

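# Note: THP is off when both files above show [never]; HugePages_Total: 0 only means that no standard huge pages are reserved

# If the setting does not stick, reboot; if THP is still enabled after the reboot, append the commands to rc.local as shown below (rc.local must be executable: chmod +x /etc/rc.d/rc.local)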

[root@oracle19crac01 ~]# echo 'echo never > /sys/kernel/mm/transparent_hugepage/defrag' >> /etc/rc.d/rc.local

[root@oracle19crac01 ~]# echo 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' >> /etc/rc.d/rc.local

[root@oracle19crac01 ~]# grep Huge /proc/meminfo

AnonHugePages: 8192 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

[root@oracle19crac01 ~]#
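The active THP setting is the value shown in square brackets. For scripted checks across both nodes, that value can be pulled out of the status line; the helper name `thp_mode` below is an assumption of this sketch.

```shell
#!/usr/bin/env bash
# thp_mode "STATUS": print the active value (the one in square brackets)
# from a line such as "always madvise [never]"; fail if no brackets are present.
thp_mode() {
    case $1 in
        *\[*\]*) ;;
        *) return 1 ;;
    esac
    local s=${1#*\[}      # drop everything up to and including the first "["
    echo "${s%%\]*}"      # drop the first "]" and everything after it
}

# Usage on a node:
#   thp_mode "$(cat /sys/kernel/mm/transparent_hugepage/enabled)"
thp_mode "always madvise [never]"   # prints: never
```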

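3.13 Disable THP and NUMA at Boot

THP is not recommended for database workloads. The main reason is that most databases access memory in a scattered pattern rather than touching contiguous pages, and that access pattern fragments memory. Without THP, an application that requests 4 KB gets 4 KB from Linux. With THP enabled system-wide, a database that requests 4 KB may instead be given a larger allocation such as 2 MB, which causes problems and overhead in how the database uses memory.

cat << 'EOF' > /etc/default/grub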

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"

GRUB_DEFAULT=saved

GRUB_DISABLE_SUBMENU=true

GRUB_TERMINAL_OUTPUT="console"

GRUB_CMDLINE_LINUX="rd.lvm.lv=centos/root rd.lvm.lv=centos/swap biosdevname=0 net.ifnames=0 rhgb quiet transparent_hugepage=never numa=off"

GRUB_DISABLE_RECOVERY="true"

EOF

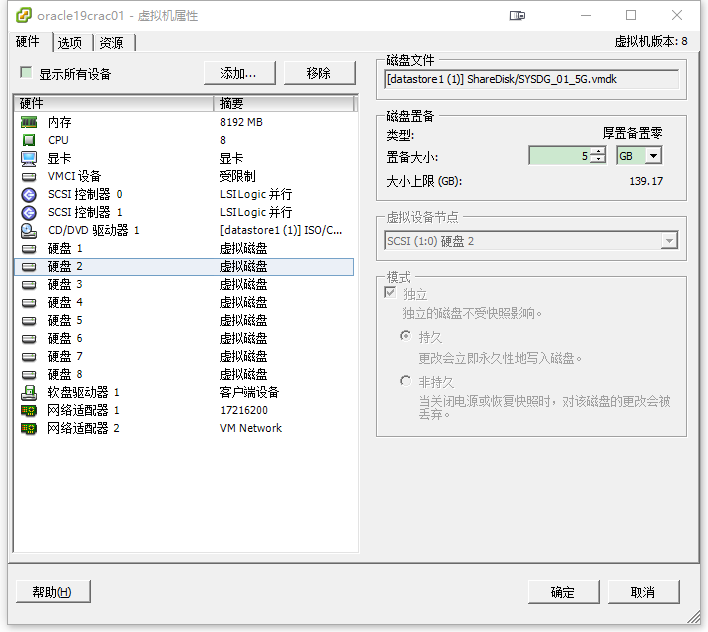

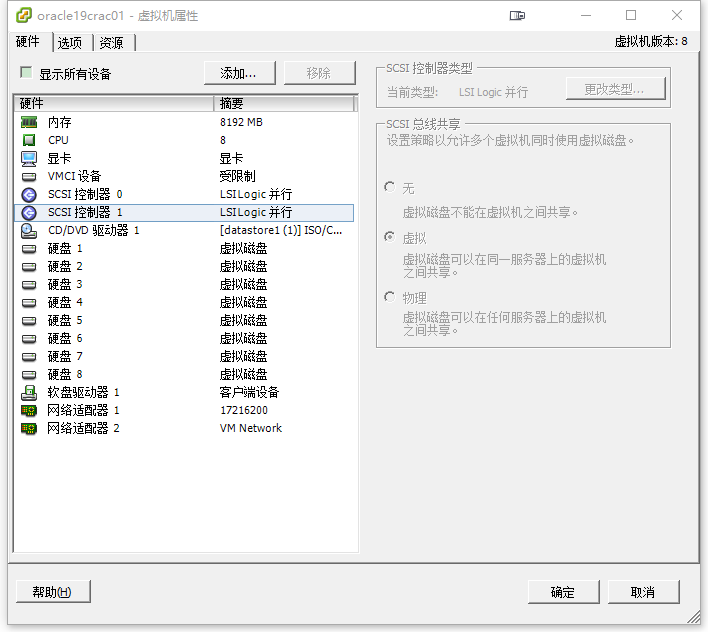
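Kernel command-line options such as transparent_hugepage=never and numa=off take effect only after the GRUB configuration is regenerated and the node is rebooted. The sketch below lists the usual CentOS 7 commands as comments (the grub.cfg path assumes a BIOS-booted system) and adds a hypothetical helper, `cmdline_has`, for checking the result afterwards.

```shell
#!/usr/bin/env bash
# cmdline_has "CMDLINE" TOKEN  (hypothetical helper)
# Succeeds when TOKEN appears as a whole word in the kernel command line string.
cmdline_has() {
    case " $1 " in
        *" $2 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# After editing /etc/default/grub, regenerate the configuration and reboot.
# On a BIOS-booted CentOS 7 system this is typically (the path differs on UEFI):
#   grub2-mkconfig -o /boot/grub2/grub.cfg
#   reboot
# Once the node is back:
#   cmdline_has "$(cat /proc/cmdline)" transparent_hugepage=never && echo "THP disabled at boot"
#   cmdline_has "$(cat /proc/cmdline)" numa=off && echo "NUMA disabled at boot"
```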

4. Add Shared Storage

Create the shared disks with the following commands:

# Log in to the vSphere host over SSH and create the shared disks with the commands below

vmkfstools -c 5120m -a lsilogic -d eagerzeroedthick /vmfs/volumes/55daf3f3-76fc5ed1-2985-7ca23e8d333c/ShareDisk/SYSDG_01_5G.vmdk

vmkfstools -c 5120m -a lsilogic -d eagerzeroedthick /vmfs/volumes/55daf3f3-76fc5ed1-2985-7ca23e8d333c/ShareDisk/SYSDG_02_5G.vmdk

vmkfstools -c 5120m -a lsilogic -d eagerzeroedthick /vmfs/volumes/55daf3f3-76fc5ed1-2985-7ca23e8d333c/ShareDisk/SYSDG_03_5G.vmdk

vmkfstools -c 51200m -a lsilogic -d eagerzeroedthick /vmfs/volumes/55daf3f3-76fc5ed1-2985-7ca23e8d333c/ShareDisk/DATA_01_50G.vmdk

vmkfstools -c 51200m -a lsilogic -d eagerzeroedthick /vmfs/volumes/55daf3f3-76fc5ed1-2985-7ca23e8d333c/ShareDisk/DATA_02_50G.vmdk

vmkfstools -c 10240m -a lsilogic -d eagerzeroedthick /vmfs/volumes/55daf3f3-76fc5ed1-2985-7ca23e8d333c/ShareDisk/FRADG_01_10G.vmdk

vmkfstools -c 10240m -a lsilogic -d eagerzeroedthick /vmfs/volumes/55daf3f3-76fc5ed1-2985-7ca23e8d333c/ShareDisk/FRADG_02_10G.vmdk
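The seven commands above differ only in disk name and size, so they can be generated from the disk plan. The sketch below only prints the commands for review and does not run them; the function name and the `DATASTORE` path are placeholders, not values from this environment.

```shell
#!/usr/bin/env bash
# print_vmkfstools_cmds DIR: print (not run) one vmkfstools command per shared disk.
# Disk names and sizes come from the ASM disk group plan in this article.
print_vmkfstools_cmds() {
    local dir=$1 spec name size
    for spec in \
        SYSDG_01_5G:5120m SYSDG_02_5G:5120m SYSDG_03_5G:5120m \
        DATA_01_50G:51200m DATA_02_50G:51200m \
        FRADG_01_10G:10240m FRADG_02_10G:10240m
    do
        name=${spec%%:*}   # part before the colon
        size=${spec##*:}   # part after the colon
        echo "vmkfstools -c $size -a lsilogic -d eagerzeroedthick $dir/$name.vmdk"
    done
}

# Example with a placeholder datastore path; review the output before using it on the host.
print_vmkfstools_cmds /vmfs/volumes/DATASTORE/ShareDisk
```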

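Once the disks are created, attach them to both virtual machines. Note that SCSI Bus Sharing on the newly added SCSI controller 1 must be set to Virtual or Physical; otherwise the virtual machines fail to start.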

4.1 Initialize the Disks

[root@oracle19crac01 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

fd0 2:0 1 4K 0 disk

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 49G 0 part

├─centos-root 253:0 0 44G 0 lvm /

└─centos-swap 253:1 0 5G 0 lvm [SWAP]

sdb 8:16 0 5G 0 disk

sdc 8:32 0 5G 0 disk

sdd 8:48 0 5G 0 disk

sde 8:64 0 10G 0 disk

sdf 8:80 0 10G 0 disk

sdg 8:96 0 50G 0 disk

sdh 8:112 0 50G 0 disk

sr0 11:0 1 4.3G 0 rom

[root@oracle19crac01 ~]#

# Run the following commands to partition the disks (on both nodes)

echo -e "n\np\n1\n\n\nw" | fdisk /dev/sdb

echo -e "n\np\n1\n\n\nw" | fdisk /dev/sdc

echo -e "n\np\n1\n\n\nw" | fdisk /dev/sdd

echo -e "n\np\n1\n\n\nw" | fdisk /dev/sde

echo -e "n\np\n1\n\n\nw" | fdisk /dev/sdf

echo -e "n\np\n1\n\n\nw" | fdisk /dev/sdg

echo -e "n\np\n1\n\n\nw" | fdisk /dev/sdh

[root@oracle19crac01 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

fd0 2:0 1 4K 0 disk

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 49G 0 part

├─centos-root 253:0 0 44G 0 lvm /

└─centos-swap 253:1 0 5G 0 lvm [SWAP]

sdb 8:16 0 5G 0 disk

└─sdb1 8:17 0 5G 0 part

sdc 8:32 0 5G 0 disk

└─sdc1 8:33 0 5G 0 part

sdd 8:48 0 5G 0 disk

└─sdd1 8:49 0 5G 0 part

sde 8:64 0 10G 0 disk

└─sde1 8:65 0 10G 0 part

sdf 8:80 0 10G 0 disk

└─sdf1 8:81 0 10G 0 part

sdg 8:96 0 50G 0 disk

└─sdg1 8:97 0 50G 0 part

sdh 8:112 0 50G 0 disk

└─sdh1 8:113 0 50G 0 part

sr0 11:0 1 4.3G 0 rom

[root@oracle19crac01 ~]#

[root@oracle19crac02 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

fd0 2:0 1 4K 0 disk

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 49G 0 part

├─centos-root 253:0 0 44G 0 lvm /

└─centos-swap 253:1 0 5G 0 lvm [SWAP]

sdb 8:16 0 5G 0 disk

└─sdb1 8:17 0 5G 0 part

sdc 8:32 0 5G 0 disk

└─sdc1 8:33 0 5G 0 part

sdd 8:48 0 5G 0 disk

└─sdd1 8:49 0 5G 0 part

sde 8:64 0 10G 0 disk

└─sde1 8:65 0 10G 0 part

sdf 8:80 0 10G 0 disk

└─sdf1 8:81 0 10G 0 part

sdg 8:96 0 50G 0 disk

└─sdg1 8:97 0 50G 0 part

sdh 8:112 0 50G 0 disk

└─sdh1 8:113 0 50G 0 part

sr0 11:0 1 4.3G 0 rom

[root@oracle19crac02 ~]#

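4.2 Configure udev Mappings

Here we generate a udev rules file, mainly to capture each disk's UUID:

# scsi_id options, assumed to match the flags used in the rule below

cat << EOF > /etc/scsi_id.config

options=--whitelisted --replace-whitespace

EOF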

for i in b c d e f g h;

do

echo "KERNEL==\"sd?1\", SUBSYSTEM==\"block\", PROGRAM==\"/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/\$parent\", RESULT==\"`/usr/lib/udev/scsi_id -g -u -d /dev/sd$i`\",SYMLINK+=\"asmdisks/asmdisk0$i\",OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\"" >>/etc/udev/rules.d/99-oracle-asmdevices.rules

done

# The generated content is shown below; adjust the letter list in "for i in b c d e f g h;" to match your disks

[root@oracle19crac01 ~]# for i in b c d e f g h;

> do

> echo "KERNEL==\"sd?1\", SUBSYSTEM==\"block\", PROGRAM==\"/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/\$parent\", RESULT==\"`/usr/lib/udev/scsi_id -g -u -d /dev/sd$i`\",SYMLINK+=\"asmdisks/asmdisk0$i\",OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\"" >>/etc/udev/rules.d/99-oracle-asmdevices.rules

> done

[root@oracle19crac01 ~]# cat /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$parent", RESULT=="36000c29b358c641dccecac78d76a7cc3",SYMLINK+="asmdisks/asmdisk0b",OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$parent", RESULT=="36000c299de58ff92c58a1047b7e1c49b",SYMLINK+="asmdisks/asmdisk0c",OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$parent", RESULT=="36000c2987363035f65954335ae2f037d",SYMLINK+="asmdisks/asmdisk0d",OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$parent", RESULT=="36000c297710422f6013910b628c58aba",SYMLINK+="asmdisks/asmdisk0e",OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$parent", RESULT=="36000c29b4ae1e4707b81900e25e1d64f",SYMLINK+="asmdisks/asmdisk0f",OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$parent", RESULT=="36000c29fc7b65aefc43cc68564abd510",SYMLINK+="asmdisks/asmdisk0g",OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$parent", RESULT=="36000c29d76892df9eed271b8e09ff7ee",SYMLINK+="asmdisks/asmdisk0h",OWNER="grid", GROUP="asmadmin", MODE="0660"

[root@oracle19crac01 ~]#
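The nested escaping in the echo loop is easy to get wrong. As an alternative sketch, printf with a single-quoted format produces the same rule text without backslash escapes. The function name `asm_udev_rule` is hypothetical; the rule format is copied from the generated file above.

```shell
#!/usr/bin/env bash
# asm_udev_rule WWID SUFFIX  (hypothetical helper)
# Prints one rule in the same format as the generated rules file.
asm_udev_rule() {
    printf 'KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$parent", RESULT=="%s",SYMLINK+="asmdisks/asmdisk0%s",OWNER="grid", GROUP="asmadmin", MODE="0660"\n' "$1" "$2"
}

# Usage on a node, as root (assumes the shared disks are sdb through sdh, as in this article):
#   for i in b c d e f g h; do
#       asm_udev_rule "$(/usr/lib/udev/scsi_id -g -u -d /dev/sd$i)" "$i"
#   done > /etc/udev/rules.d/99-oracle-asmdevices.rules
asm_udev_rule 36000c29b358c641dccecac78d76a7cc3 b
```

Redirecting the whole loop with `>` rewrites the file in one go, so running it twice does not leave duplicate rules the way repeated `>>` appends would.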

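4.3 Re-read the Partition Tables

fdisk only writes the partition information to disk; running mkfs on the new partitions would otherwise require a reboot. partprobe makes the kernel re-read the partition information, which avoids the reboot.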

/sbin/partprobe /dev/sdb1

/sbin/partprobe /dev/sdc1

/sbin/partprobe /dev/sdd1

/sbin/partprobe /dev/sde1

/sbin/partprobe /dev/sdf1

/sbin/partprobe /dev/sdg1

/sbin/partprobe /dev/sdh1

4.4 Trigger udev and Check the Mapped Disks

[root@oracle19crac01 ~]# /sbin/udevadm trigger --type=devices --action=change

[root@oracle19crac01 ~]# ls -alrth /dev/asmdisks/*

lrwxrwxrwx. 1 root root 7 Jul 13 21:22 /dev/asmdisks/asmdisk0e -> ../sde1

lrwxrwxrwx. 1 root root 7 Jul 13 21:22 /dev/asmdisks/asmdisk0c -> ../sdc1

lrwxrwxrwx. 1 root root 7 Jul 13 21:22 /dev/asmdisks/asmdisk0f -> ../sdf1

lrwxrwxrwx. 1 root root 7 Jul 13 21:22 /dev/asmdisks/asmdisk0d -> ../sdd1

lrwxrwxrwx. 1 root root 7 Jul 13 21:22 /dev/asmdisks/asmdisk0h -> ../sdh1

lrwxrwxrwx. 1 root root 7 Jul 13 21:22 /dev/asmdisks/asmdisk0b -> ../sdb1

lrwxrwxrwx. 1 root root 7 Jul 13 21:22 /dev/asmdisks/asmdisk0g -> ../sdg1

[root@oracle19crac01 ~]#

[root@oracle19crac02 ~]# /sbin/udevadm trigger --type=devices --action=change

[root@oracle19crac02 ~]# ls -alrth /dev/asmdisks/*

lrwxrwxrwx. 1 root root 7 Jul 13 21:22 /dev/asmdisks/asmdisk0b -> ../sdb1

lrwxrwxrwx. 1 root root 7 Jul 13 21:22 /dev/asmdisks/asmdisk0f -> ../sdf1

lrwxrwxrwx. 1 root root 7 Jul 13 21:22 /dev/asmdisks/asmdisk0g -> ../sdg1

lrwxrwxrwx. 1 root root 7 Jul 13 21:22 /dev/asmdisks/asmdisk0e -> ../sde1

lrwxrwxrwx. 1 root root 7 Jul 13 21:22 /dev/asmdisks/asmdisk0h -> ../sdh1

lrwxrwxrwx. 1 root root 7 Jul 13 21:22 /dev/asmdisks/asmdisk0d -> ../sdd1

lrwxrwxrwx. 1 root root 7 Jul 13 21:22 /dev/asmdisks/asmdisk0c -> ../sdc1

[root@oracle19crac02 ~]#
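Both nodes should show one symlink per shared disk. A small sketch for checking this in a script follows; the function name `count_symlinks` is hypothetical, and the expected count of seven comes from the disk plan in this article.

```shell
#!/usr/bin/env bash
# count_symlinks DIR: print how many symbolic links DIR contains (0 if DIR is missing or empty).
count_symlinks() {
    local n=0 f
    for f in "$1"/*; do
        if [ -L "$f" ]; then
            n=$((n + 1))
        fi
    done
    echo "$n"
}

# Usage on each node; seven links are expected for the seven shared disks:
#   [ "$(count_symlinks /dev/asmdisks)" -eq 7 ] && echo "all ASM disks mapped"
count_symlinks /dev/asmdisks
```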

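Now we can start installing the Grid cluster software.

5. Install the Grid Cluster Software

Upload the Grid installation package to node 1. In 19c, the Grid package must be unzipped directly into the grid user's ORACLE_HOME, so extract it to /u01/app/19.0.0/grid as follows: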

[root@oracle19crac01 oracle19c]# ls

LINUX.X64_193000_db_home.zip LINUX.X64_193000_grid_home.zip

[root@oracle19crac01 oracle19c]# cp LINUX.X64_193000_grid_home.zip /u01/app/19.0.0/grid/

[root@oracle19crac01 oracle19c]# cd /u01/app/19.0.0/grid/

[root@oracle19crac01 grid]# pwd

/u01/app/19.0.0/grid

[root@oracle19crac01 grid]# ls

LINUX.X64_193000_grid_home.zip

[root@oracle19crac01 grid]# chown -R grid:oinstall LINUX.X64_193000_grid_home.zip

[root@oracle19crac01 grid]# su - grid

Last login: Tue Jul 13 20:06:44 CST 2021 on pts/0

$ORACLE_SID: +ASM1

$ORACLE_HOME: /u01/app/19.0.0/grid

grid@oracle19crac01[+ASM1]/home/grid$

grid@oracle19crac01[+ASM1]/home/grid$ll

total 0

grid@oracle19crac01[+ASM1]/home/grid$ls

grid@oracle19crac01[+ASM1]/home/grid$pwd

/home/grid

grid@oracle19crac01[+ASM1]/home/grid$cd /u01/app/19.0.0/grid/

grid@oracle19crac01[+ASM1]/u01/app/19.0.0/grid$ls

LINUX.X64_193000_grid_home.zip

grid@oracle19crac01[+ASM1]/u01/app/19.0.0/grid$ll

total 2821472

-rw-r--r--. 1 grid oinstall 2889184573 Jul 13 21:30 LINUX.X64_193000_grid_home.zip

grid@oracle19crac01[+ASM1]/u01/app/19.0.0/grid$unzip LINUX.X64_193000_grid_home.zip

Before installation, cvuqdisk-1.0.10-1.x86_64 must be installed on both nodes; the package is under $ORACLE_HOME/cv/rpm/. Switch to the root user:

[root@oracle19crac01 grid]# cd /u01/app/19.0.0/grid/cv/rpm

[root@oracle19crac01 rpm]# ls

cvuqdisk-1.0.10-1.rpm

[root@oracle19crac01 rpm]# rpm -ivh cvuqdisk-1.0.10-1.rpm

Preparing... ################################# [100%]

Using default group oinstall to install package

Updating / installing...

1:cvuqdisk-1.0.10-1 ################################# [100%]

[root@oracle19crac01 rpm]#

5.1 Configure SSH User Equivalence

# SSH trust for the grid user; enter the grid user's password when prompted (set to grid earlier)

source ~/.bash_profile

$ORACLE_HOME/oui/prov/resources/scripts/sshUserSetup.sh -user grid -hosts "oracle19crac01 oracle19crac02" -advanced -noPromptPassphrase

# SSH trust for the oracle user; enter the oracle user's password when prompted (set to oracle earlier)

$ORACLE_HOME/oui/prov/resources/scripts/sshUserSetup.sh -user oracle -hosts "oracle19crac01 oracle19crac02" -advanced -noPromptPassphrase

5.2 Install the Grid Cluster Software

Run a final check before installing; once it passes, the installation can begin:

grid@oracle19crac01[+ASM1]/u01/app/19.0.0/grid$$ORACLE_HOME/runcluvfy.sh stage -pre crsinst -n "oracle19crac01,oracle19crac02" -verbose

Verifying Physical Memory ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 7.6377GB (8008660.0KB) 8GB (8388608.0KB) passed

oracle19crac01 7.6376GB (8008648.0KB) 8GB (8388608.0KB) passed

Verifying Physical Memory ...PASSED

Verifying Available Physical Memory ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 7.3689GB (7726864.0KB) 50MB (51200.0KB) passed

oracle19crac01 7.0316GB (7373132.0KB) 50MB (51200.0KB) passed

Verifying Available Physical Memory ...PASSED

Verifying Swap Size ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 5GB (5242876.0KB) 7.6377GB (8008660.0KB) failed

oracle19crac01 5GB (5242876.0KB) 7.6376GB (8008648.0KB) failed

Verifying Swap Size ...FAILED (PRVF-7573)

Verifying Free Space: oracle19crac02:/usr,oracle19crac02:/var,oracle19crac02:/etc,oracle19crac02:/sbin,oracle19crac02:/tmp ...

Path Node Name Mount point Available Required Status

---------------- ------------ ------------ ------------ ------------ ------------

/usr oracle19crac02 / 44.6309GB 25MB passed

/var oracle19crac02 / 44.6309GB 5MB passed

/etc oracle19crac02 / 44.6309GB 25MB passed

/sbin oracle19crac02 / 44.6309GB 10MB passed

/tmp oracle19crac02 / 44.6309GB 1GB passed

Verifying Free Space: oracle19crac02:/usr,oracle19crac02:/var,oracle19crac02:/etc,oracle19crac02:/sbin,oracle19crac02:/tmp ...PASSED

Verifying Free Space: oracle19crac01:/usr,oracle19crac01:/var,oracle19crac01:/etc,oracle19crac01:/sbin,oracle19crac01:/tmp ...

Path Node Name Mount point Available Required Status

---------------- ------------ ------------ ------------ ------------ ------------

/usr oracle19crac01 / 21.6578GB 25MB passed

/var oracle19crac01 / 21.6578GB 5MB passed

/etc oracle19crac01 / 21.6578GB 25MB passed

/sbin oracle19crac01 / 21.6578GB 10MB passed

/tmp oracle19crac01 / 21.6578GB 1GB passed

Verifying Free Space: oracle19crac01:/usr,oracle19crac01:/var,oracle19crac01:/etc,oracle19crac01:/sbin,oracle19crac01:/tmp ...PASSED

Verifying User Existence: grid ...

Node Name Status Comment

------------ ------------------------ ------------------------

oracle19crac02 passed exists(54322)

oracle19crac01 passed exists(54322)

Verifying Users With Same UID: 54322 ...PASSED

Verifying User Existence: grid ...PASSED

Verifying Group Existence: asmadmin ...

Node Name Status Comment

------------ ------------------------ ------------------------

oracle19crac02 passed exists

oracle19crac01 passed exists

Verifying Group Existence: asmadmin ...PASSED

Verifying Group Existence: asmdba ...

Node Name Status Comment

------------ ------------------------ ------------------------

oracle19crac02 passed exists

oracle19crac01 passed exists

Verifying Group Existence: asmdba ...PASSED

Verifying Group Existence: oinstall ...

Node Name Status Comment

------------ ------------------------ ------------------------

oracle19crac02 passed exists

oracle19crac01 passed exists

Verifying Group Existence: oinstall ...PASSED

Verifying Group Membership: asmdba ...

Node Name User Exists Group Exists User in Group Status

---------------- ------------ ------------ ------------ ----------------

oracle19crac02 yes yes yes passed

oracle19crac01 yes yes yes passed

Verifying Group Membership: asmdba ...PASSED

Verifying Group Membership: asmadmin ...

Node Name User Exists Group Exists User in Group Status

---------------- ------------ ------------ ------------ ----------------

oracle19crac02 yes yes yes passed

oracle19crac01 yes yes yes passed

Verifying Group Membership: asmadmin ...PASSED

Verifying Group Membership: oinstall(Primary) ...

Node Name User Exists Group Exists User in Group Primary Status

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac02 yes yes yes yes passed

oracle19crac01 yes yes yes yes passed

Verifying Group Membership: oinstall(Primary) ...PASSED

Verifying Run Level ...

Node Name run level Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 3 3,5 passed

oracle19crac01 3 3,5 passed

Verifying Run Level ...PASSED

Verifying Hard Limit: maximum open file descriptors ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

oracle19crac02 hard 65536 65536 passed

oracle19crac01 hard 65536 65536 passed

Verifying Hard Limit: maximum open file descriptors ...PASSED

Verifying Soft Limit: maximum open file descriptors ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

oracle19crac02 soft 1024 1024 passed

oracle19crac01 soft 1024 1024 passed

Verifying Soft Limit: maximum open file descriptors ...PASSED

Verifying Hard Limit: maximum user processes ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

oracle19crac02 hard 16384 16384 passed

oracle19crac01 hard 16384 16384 passed

Verifying Hard Limit: maximum user processes ...PASSED

Verifying Soft Limit: maximum user processes ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

oracle19crac02 soft 16384 2047 passed

oracle19crac01 soft 16384 2047 passed

Verifying Soft Limit: maximum user processes ...PASSED

Verifying Soft Limit: maximum stack size ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

oracle19crac02 soft 10240 10240 passed

oracle19crac01 soft 10240 10240 passed

Verifying Soft Limit: maximum stack size ...PASSED

Verifying Architecture ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 x86_64 x86_64 passed

oracle19crac01 x86_64 x86_64 passed

Verifying Architecture ...PASSED

Verifying OS Kernel Version ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 3.10.0-957.el7.x86_64 3.10.0 passed

oracle19crac01 3.10.0-957.el7.x86_64 3.10.0 passed

Verifying OS Kernel Version ...PASSED

Verifying OS Kernel Parameter: semmsl ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac01 250 250 250 passed

oracle19crac02 250 250 250 passed

Verifying OS Kernel Parameter: semmsl ...PASSED

Verifying OS Kernel Parameter: semmns ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac01 32000 32000 32000 passed

oracle19crac02 32000 32000 32000 passed

Verifying OS Kernel Parameter: semmns ...PASSED

Verifying OS Kernel Parameter: semopm ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac01 100 100 100 passed

oracle19crac02 100 100 100 passed

Verifying OS Kernel Parameter: semopm ...PASSED

Verifying OS Kernel Parameter: semmni ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac01 128 128 128 passed

oracle19crac02 128 128 128 passed

Verifying OS Kernel Parameter: semmni ...PASSED

Verifying OS Kernel Parameter: shmmax ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac01 5798205849 5798205849 4100427776 passed

oracle19crac02 5798205849 5798205849 4100433920 passed

Verifying OS Kernel Parameter: shmmax ...PASSED

Verifying OS Kernel Parameter: shmmni ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac01 4096 4096 4096 passed

oracle19crac02 4096 4096 4096 passed

Verifying OS Kernel Parameter: shmmni ...PASSED

Verifying OS Kernel Parameter: shmall ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac01 1415577 1415577 1415577 passed

oracle19crac02 1415577 1415577 1415577 passed

Verifying OS Kernel Parameter: shmall ...PASSED

Verifying OS Kernel Parameter: file-max ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac01 6815744 6815744 6815744 passed

oracle19crac02 6815744 6815744 6815744 passed

Verifying OS Kernel Parameter: file-max ...PASSED

Verifying OS Kernel Parameter: ip_local_port_range ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac01 between 9000 & 65500 between 9000 & 65500 between 9000 & 65535 passed

oracle19crac02 between 9000 & 65500 between 9000 & 65500 between 9000 & 65535 passed

Verifying OS Kernel Parameter: ip_local_port_range ...PASSED

Verifying OS Kernel Parameter: rmem_default ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac01 262144 262144 262144 passed

oracle19crac02 262144 262144 262144 passed

Verifying OS Kernel Parameter: rmem_default ...PASSED

Verifying OS Kernel Parameter: rmem_max ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac01 4194304 4194304 4194304 passed

oracle19crac02 4194304 4194304 4194304 passed

Verifying OS Kernel Parameter: rmem_max ...PASSED

Verifying OS Kernel Parameter: wmem_default ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac01 262144 262144 262144 passed

oracle19crac02 262144 262144 262144 passed

Verifying OS Kernel Parameter: wmem_default ...PASSED

Verifying OS Kernel Parameter: wmem_max ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac01 4194304 4194304 1048576 passed

oracle19crac02 4194304 4194304 1048576 passed

Verifying OS Kernel Parameter: wmem_max ...PASSED

Verifying OS Kernel Parameter: aio-max-nr ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

oracle19crac01 4194304 4194304 1048576 passed

oracle19crac02 4194304 4194304 1048576 passed

Verifying OS Kernel Parameter: aio-max-nr ...PASSED

Verifying Package: kmod-20-21 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 kmod(x86_64)-20-23.el7 kmod(x86_64)-20-21 passed

oracle19crac01 kmod(x86_64)-20-23.el7 kmod(x86_64)-20-21 passed

Verifying Package: kmod-20-21 (x86_64) ...PASSED

Verifying Package: kmod-libs-20-21 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 kmod-libs(x86_64)-20-23.el7 kmod-libs(x86_64)-20-21 passed

oracle19crac01 kmod-libs(x86_64)-20-23.el7 kmod-libs(x86_64)-20-21 passed

Verifying Package: kmod-libs-20-21 (x86_64) ...PASSED

Verifying Package: binutils-2.23.52.0.1 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 binutils-2.27-44.base.el7 binutils-2.23.52.0.1 passed

oracle19crac01 binutils-2.27-44.base.el7 binutils-2.23.52.0.1 passed

Verifying Package: binutils-2.23.52.0.1 ...PASSED

Verifying Package: compat-libcap1-1.10 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 compat-libcap1-1.10-7.el7 compat-libcap1-1.10 passed

oracle19crac01 compat-libcap1-1.10-7.el7 compat-libcap1-1.10 passed

Verifying Package: compat-libcap1-1.10 ...PASSED

Verifying Package: libgcc-4.8.2 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 libgcc(x86_64)-4.8.5-44.el7 libgcc(x86_64)-4.8.2 passed

oracle19crac01 libgcc(x86_64)-4.8.5-44.el7 libgcc(x86_64)-4.8.2 passed

Verifying Package: libgcc-4.8.2 (x86_64) ...PASSED

Verifying Package: libstdc++-4.8.2 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 libstdc++(x86_64)-4.8.5-44.el7 libstdc++(x86_64)-4.8.2 passed

oracle19crac01 libstdc++(x86_64)-4.8.5-44.el7 libstdc++(x86_64)-4.8.2 passed

Verifying Package: libstdc++-4.8.2 (x86_64) ...PASSED

Verifying Package: libstdc++-devel-4.8.2 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 libstdc++-devel(x86_64)-4.8.5-44.el7 libstdc++-devel(x86_64)-4.8.2 passed

oracle19crac01 libstdc++-devel(x86_64)-4.8.5-44.el7 libstdc++-devel(x86_64)-4.8.2 passed

Verifying Package: libstdc++-devel-4.8.2 (x86_64) ...PASSED

Verifying Package: sysstat-10.1.5 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 sysstat-10.1.5-19.el7 sysstat-10.1.5 passed

oracle19crac01 sysstat-10.1.5-19.el7 sysstat-10.1.5 passed

Verifying Package: sysstat-10.1.5 ...PASSED

Verifying Package: gcc-c++-4.8.2 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 gcc-c++-4.8.5-44.el7 gcc-c++-4.8.2 passed

oracle19crac01 gcc-c++-4.8.5-44.el7 gcc-c++-4.8.2 passed

Verifying Package: gcc-c++-4.8.2 ...PASSED

Verifying Package: ksh ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 ksh ksh passed

oracle19crac01 ksh ksh passed

Verifying Package: ksh ...PASSED

Verifying Package: make-3.82 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 make-3.82-24.el7 make-3.82 passed

oracle19crac01 make-3.82-24.el7 make-3.82 passed

Verifying Package: make-3.82 ...PASSED

Verifying Package: glibc-2.17 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 glibc(x86_64)-2.17-324.el7_9 glibc(x86_64)-2.17 passed

oracle19crac01 glibc(x86_64)-2.17-324.el7_9 glibc(x86_64)-2.17 passed

Verifying Package: glibc-2.17 (x86_64) ...PASSED

Verifying Package: glibc-devel-2.17 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 glibc-devel(x86_64)-2.17-324.el7_9 glibc-devel(x86_64)-2.17 passed

oracle19crac01 glibc-devel(x86_64)-2.17-324.el7_9 glibc-devel(x86_64)-2.17 passed

Verifying Package: glibc-devel-2.17 (x86_64) ...PASSED

Verifying Package: libaio-0.3.109 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 libaio(x86_64)-0.3.109-13.el7 libaio(x86_64)-0.3.109 passed

oracle19crac01 libaio(x86_64)-0.3.109-13.el7 libaio(x86_64)-0.3.109 passed

Verifying Package: libaio-0.3.109 (x86_64) ...PASSED

Verifying Package: libaio-devel-0.3.109 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 libaio-devel(x86_64)-0.3.109-13.el7 libaio-devel(x86_64)-0.3.109 passed

oracle19crac01 libaio-devel(x86_64)-0.3.109-13.el7 libaio-devel(x86_64)-0.3.109 passed

Verifying Package: libaio-devel-0.3.109 (x86_64) ...PASSED

Verifying Package: nfs-utils-1.2.3-15 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 nfs-utils-1.3.0-0.68.el7 nfs-utils-1.2.3-15 passed

oracle19crac01 nfs-utils-1.3.0-0.68.el7 nfs-utils-1.2.3-15 passed

Verifying Package: nfs-utils-1.2.3-15 ...PASSED

Verifying Package: smartmontools-6.2-4 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 smartmontools-7.0-2.el7 smartmontools-6.2-4 passed

oracle19crac01 smartmontools-7.0-2.el7 smartmontools-6.2-4 passed

Verifying Package: smartmontools-6.2-4 ...PASSED

Verifying Package: net-tools-2.0-0.17 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 net-tools-2.0-0.25.20131004git.el7 net-tools-2.0-0.17 passed

oracle19crac01 net-tools-2.0-0.25.20131004git.el7 net-tools-2.0-0.17 passed

Verifying Package: net-tools-2.0-0.17 ...PASSED

Verifying Package: compat-libstdc++-33-3.2.3 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 compat-libstdc++-33(x86_64)-3.2.3-72.el7 compat-libstdc++-33(x86_64)-3.2.3 passed

oracle19crac01 compat-libstdc++-33(x86_64)-3.2.3-72.el7 compat-libstdc++-33(x86_64)-3.2.3 passed

Verifying Package: compat-libstdc++-33-3.2.3 (x86_64) ...PASSED

Verifying Package: libxcb-1.11 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 libxcb(x86_64)-1.13-1.el7 libxcb(x86_64)-1.11 passed

oracle19crac01 libxcb(x86_64)-1.13-1.el7 libxcb(x86_64)-1.11 passed

Verifying Package: libxcb-1.11 (x86_64) ...PASSED

Verifying Package: libX11-1.6.3 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 libX11(x86_64)-1.6.7-3.el7_9 libX11(x86_64)-1.6.3 passed

oracle19crac01 libX11(x86_64)-1.6.7-3.el7_9 libX11(x86_64)-1.6.3 passed

Verifying Package: libX11-1.6.3 (x86_64) ...PASSED

Verifying Package: libXau-1.0.8 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 libXau(x86_64)-1.0.8-2.1.el7 libXau(x86_64)-1.0.8 passed

oracle19crac01 libXau(x86_64)-1.0.8-2.1.el7 libXau(x86_64)-1.0.8 passed

Verifying Package: libXau-1.0.8 (x86_64) ...PASSED

Verifying Package: libXi-1.7.4 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 libXi(x86_64)-1.7.9-1.el7 libXi(x86_64)-1.7.4 passed

oracle19crac01 libXi(x86_64)-1.7.9-1.el7 libXi(x86_64)-1.7.4 passed

Verifying Package: libXi-1.7.4 (x86_64) ...PASSED

Verifying Package: libXtst-1.2.2 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 libXtst(x86_64)-1.2.3-1.el7 libXtst(x86_64)-1.2.2 passed

oracle19crac01 libXtst(x86_64)-1.2.3-1.el7 libXtst(x86_64)-1.2.2 passed

Verifying Package: libXtst-1.2.2 (x86_64) ...PASSED

Verifying Port Availability for component "Oracle Notification Service (ONS)" ...

Node Name Port Number Protocol Available Status

---------------- ------------ ------------ ------------ ----------------

oracle19crac02 6200 TCP yes successful

oracle19crac01 6200 TCP yes successful

oracle19crac02 6100 TCP yes successful

oracle19crac01 6100 TCP yes successful

Verifying Port Availability for component "Oracle Notification Service (ONS)" ...PASSED

Verifying Port Availability for component "Oracle Cluster Synchronization Services (CSSD)" ...

Node Name Port Number Protocol Available Status

---------------- ------------ ------------ ------------ ----------------

oracle19crac02 42424 TCP yes successful

oracle19crac01 42424 TCP yes successful

Verifying Port Availability for component "Oracle Cluster Synchronization Services (CSSD)" ...PASSED

Verifying Users With Same UID: 0 ...PASSED

Verifying Current Group ID ...PASSED

Verifying Root user consistency ...

Node Name Status

------------------------------------ ------------------------

oracle19crac02 passed

oracle19crac01 passed

Verifying Root user consistency ...PASSED

Verifying Package: cvuqdisk-1.0.10-1 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 cvuqdisk-1.0.10-1 cvuqdisk-1.0.10-1 passed

oracle19crac01 cvuqdisk-1.0.10-1 cvuqdisk-1.0.10-1 passed

Verifying Package: cvuqdisk-1.0.10-1 ...PASSED

Verifying Host name ...PASSED

Verifying Node Connectivity ...

Verifying Hosts File ...

Node Name Status

------------------------------------ ------------------------

oracle19crac01 passed

oracle19crac02 passed

Verifying Hosts File ...PASSED

Interface information for node "oracle19crac01"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

ens160 172.16.200.21 172.16.200.0 0.0.0.0 172.16.200.254 00:0C:29:7E:05:83 1500

ens192 192.168.0.21 192.168.0.0 0.0.0.0 172.16.200.254 00:0C:29:7E:05:8D 1500

Interface information for node "oracle19crac02"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

ens160 172.16.200.22 172.16.200.0 0.0.0.0 172.16.200.254 00:0C:29:A2:59:2E 1500

ens192 192.168.0.22 192.168.0.0 0.0.0.0 172.16.200.254 00:0C:29:A2:59:38 1500

Check: MTU consistency of the subnet "192.168.0.0".

Node Name IP Address Subnet MTU

---------------- ------------ ------------ ------------ ----------------

oracle19crac01 ens192 192.168.0.21 192.168.0.0 1500

oracle19crac02 ens192 192.168.0.22 192.168.0.0 1500

Check: MTU consistency of the subnet "172.16.200.0".

Node Name IP Address Subnet MTU

---------------- ------------ ------------ ------------ ----------------

oracle19crac01 ens160 172.16.200.21 172.16.200.0 1500

oracle19crac02 ens160 172.16.200.22 172.16.200.0 1500

Verifying Check that maximum (MTU) size packet goes through subnet ...PASSED

Source Destination Connected?

------------------------------ ------------------------------ ----------------

oracle19crac01[ens192:192.168.0.21] oracle19crac02[ens192:192.168.0.22] yes

Source Destination Connected?

------------------------------ ------------------------------ ----------------

oracle19crac01[ens160:172.16.200.21] oracle19crac02[ens160:172.16.200.22] yes

Verifying subnet mask consistency for subnet "192.168.0.0" ...PASSED

Verifying subnet mask consistency for subnet "172.16.200.0" ...PASSED

Verifying Node Connectivity ...PASSED

Verifying Multicast or broadcast check ...

Checking subnet "192.168.0.0" for multicast communication with multicast group "224.0.0.251"

Verifying Multicast or broadcast check ...PASSED

Verifying Network Time Protocol (NTP) ...PASSED

Verifying Same core file name pattern ...PASSED

Verifying User Mask ...

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

oracle19crac02 0022 0022 passed

oracle19crac01 0022 0022 passed

Verifying User Mask ...PASSED

Verifying User Not In Group "root": grid ...

Node Name Status Comment

------------ ------------------------ ------------------------

oracle19crac02 passed does not exist

oracle19crac01 passed does not exist

Verifying User Not In Group "root": grid ...PASSED

Verifying Time zone consistency ...PASSED

Verifying Time offset between nodes ...PASSED

Verifying resolv.conf Integrity ...

Node Name Status

------------------------------------ ------------------------

oracle19crac01 passed

oracle19crac02 passed

checking response for name "oracle19crac02" from each of the name servers

specified in "/etc/resolv.conf"

Node Name Source Comment Status

------------ ------------------------ ------------------------ ----------

oracle19crac02 114.114.114.114 IPv4 passed

oracle19crac02 8.8.8.8 IPv4 passed

checking response for name "oracle19crac01" from each of the name servers

specified in "/etc/resolv.conf"

Node Name Source Comment Status

------------ ------------------------ ------------------------ ----------

oracle19crac01 114.114.114.114 IPv4 passed

oracle19crac01 8.8.8.8 IPv4 passed

Verifying resolv.conf Integrity ...PASSED

Verifying DNS/NIS name service ...PASSED

Verifying Domain Sockets ...PASSED

Verifying /boot mount ...PASSED

Verifying Daemon "avahi-daemon" not configured and running ...

Node Name Configured Status

------------ ------------------------ ------------------------

oracle19crac02 no passed

oracle19crac01 no passed

Node Name Running? Status

------------ ------------------------ ------------------------

oracle19crac02 no passed

oracle19crac01 no passed

Verifying Daemon "avahi-daemon" not configured and running ...PASSED

Verifying Daemon "proxyt" not configured and running ...

Node Name Configured Status

------------ ------------------------ ------------------------

oracle19crac02 no passed

oracle19crac01 no passed

Node Name Running? Status

------------ ------------------------ ------------------------

oracle19crac02 no passed

oracle19crac01 no passed

Verifying Daemon "proxyt" not configured and running ...PASSED

Verifying User Equivalence ...PASSED

Verifying RPM Package Manager database ...INFORMATION (PRVG-11250)

Verifying /dev/shm mounted as temporary file system ...PASSED

Verifying File system mount options for path /var ...PASSED

Verifying DefaultTasksMax parameter ...PASSED

Verifying zeroconf check ...PASSED

Verifying ASM Filter Driver configuration ...PASSED

Pre-check for cluster services setup was unsuccessful on all the nodes.

Failures were encountered during execution of CVU verification request "stage -pre crsinst".

Verifying Swap Size ...FAILED

oracle19crac02: PRVF-7573 : Sufficient swap size is not available on node

"oracle19crac02" [Required = 7.6377GB (8008660.0KB) ; Found =

5GB (5242876.0KB)]

oracle19crac01: PRVF-7573 : Sufficient swap size is not available on node

"oracle19crac01" [Required = 7.6376GB (8008648.0KB) ; Found =

5GB (5242876.0KB)]

Verifying RPM Package Manager database ...INFORMATION

PRVG-11250 : The check "RPM Package Manager database" was not performed because

it needs 'root' user privileges.

CVU operation performed: stage -pre crsinst

Date: Jul 13, 2021 11:00:15 PM

CVU home: /u01/app/19.0.0/grid/

User: grid

grid@oracle19crac01[+ASM1]/u01/app/19.0.0/grid$

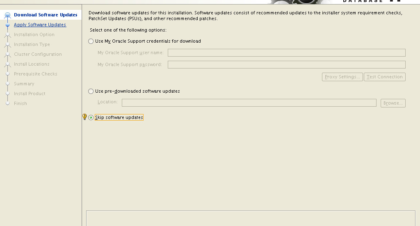
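The pre-check output above runs to several hundred lines, and only a couple of them matter. As a rough sketch (not part of the original procedure), the summary lines can be filtered so that only the checks that did not pass remain. The function name cvu_non_passed and the idea of saving the output to a file first are my own assumptions; the only thing relied on is the "Verifying <name> ...<STATUS>" line shape visible in the output above.

```shell
#!/usr/bin/env bash
# Sketch: list every cluvfy check whose summary line is not PASSED.
# Reads cluvfy output on stdin. cvu_non_passed is a hypothetical helper name.
cvu_non_passed() {
  # Summary lines look like "Verifying <check name> ...<STATUS>", optionally
  # followed by " (<message id>)". Lines ending in a bare "..." only mark the
  # start of a check and are skipped because no status word follows the dots.
  sed -n -E 's/^Verifying (.*) \.\.\.([A-Z]+)( \(.*\))?$/\2 \1/p' \
    | grep -v '^PASSED ' \
    | sort -u
}

# Usage (cluvfy.log is a hypothetical file holding the saved output):
#   cvu_non_passed < cluvfy.log
```

For the run shown above this should leave just two lines, one for Swap Size and one for the RPM Package Manager database check.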

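The pre-check reported two items here. The first is the swap size failure (PRVF-7573): about 7.64 GB of swap is required on each node, but only 5 GB was found. The second, PRVG-11250, is informational only: the RPM database check was skipped because it needs root privileges. We simply ignore the swap failure and go ahead with installing the Grid cluster.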
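For anyone who would rather fix the swap failure than ignore it, here is a small sketch of how the required size seems to be derived. In the output above the required value (8008660 KB) is tied to the node's RAM, which matches the sizing rule in Oracle's installation guide as I understand it: swap equal to RAM up to 16 GB, and 16 GB beyond that. The 1.5x branch for very small hosts is an assumption taken from the database guide, and required_swap_kb is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: required swap (in KB) for a given amount of RAM (in KB).
# Assumed rule: <= 2 GB RAM -> 1.5 x RAM; 2..16 GB -> equal to RAM; > 16 GB -> 16 GB.
required_swap_kb() {
  local ram_kb=$1
  local gb=$((1024 * 1024))   # number of KB in one GB
  if (( ram_kb <= 2 * gb )); then
    echo $(( ram_kb * 3 / 2 ))
  elif (( ram_kb <= 16 * gb )); then
    echo "$ram_kb"
  else
    echo $(( 16 * gb ))
  fi
}

# Usage on a live node (reads the kernel's own figures):
#   ram_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
#   swap_kb=$(awk '/^SwapTotal:/ {print $2}' /proc/meminfo)
#   echo "shortfall: $(( $(required_swap_kb "$ram_kb") - swap_kb )) KB"
```

With the figures reported for oracle19crac02 (8008660 KB required, 5242876 KB found), the shortfall works out to 2765784 KB, so roughly 3 GB of extra swap would clear the check.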

su - grid

# Adapt these values to your own environment before running:
#   oracle.install.crs.config.clusterName          (cluster name)
#   oracle.install.crs.config.gpnp.scanName        (SCAN name)
#   oracle.install.crs.config.clusterNodes         (host names and VIP names)
#   oracle.install.crs.config.networkInterfaceList (NIC names and subnets)
#   oracle.install.asm.SYSASMPassword, oracle.install.asm.monitorPassword (passwords)
# Keep comments and blank lines out of the command itself: either one placed
# between backslash-continued lines ends the command early.
${ORACLE_HOME}/gridSetup.sh -ignorePrereq -waitforcompletion -silent \
-responseFile ${ORACLE_HOME}/install/response/gridsetup.rsp \
INVENTORY_LOCATION=/u01/app/oraInventory \
SELECTED_LANGUAGES=en,en_GB \
oracle.install.option=CRS_CONFIG \
ORACLE_BASE=/u01/app/grid \
oracle.install.asm.OSDBA=asmdba \
oracle.install.asm.OSASM=asmadmin \
oracle.install.asm.OSOPER=asmoper \
oracle.install.crs.config.scanType=LOCAL_SCAN \
oracle.install.crs.config.clusterName=racdb \
oracle.install.crs.config.gpnp.scanName=oracle19crac-scan \
oracle.install.crs.config.gpnp.scanPort=1521 \
oracle.install.crs.config.ClusterConfiguration=STANDALONE \
oracle.install.crs.config.configureAsExtendedCluster=false \
oracle.install.crs.config.gpnp.configureGNS=false \
oracle.install.crs.config.autoConfigureClusterNodeVIP=false \
oracle.install.crs.config.clusterNodes=oracle19crac01:oracle19crac01-vip:HUB,oracle19crac02:oracle19crac02-vip:HUB \
oracle.install.crs.config.networkInterfaceList=ens160:172.16.200.0:1,ens192:192.168.0.0:5 \
oracle.install.asm.configureGIMRDataDG=false \
oracle.install.crs.config.useIPMI=false \
oracle.install.asm.storageOption=ASM \
oracle.install.asmOnNAS.configureGIMRDataDG=false \
oracle.install.asm.SYSASMPassword=oracle \
oracle.install.asm.diskGroup.name=SYSDG \
oracle.install.asm.diskGroup.redundancy=NORMAL \
oracle.install.asm.diskGroup.AUSize=4 \
oracle.install.asm.diskGroup.disksWithFailureGroupNames=/dev/asmdisks/asmdisk0b,,/dev/asmdisks/asmdisk0c,,/dev/asmdisks/asmdisk0d, \
oracle.install.asm.diskGroup.disks=/dev/asmdisks/asmdisk0b,/dev/asmdisks/asmdisk0c,/dev/asmdisks/asmdisk0d \
oracle.install.asm.diskGroup.diskDiscoveryString=/dev/asmdisks/* \
oracle.install.asm.configureAFD=false \
oracle.install.asm.monitorPassword=oracle \
oracle.install.crs.configureRHPS=false \
oracle.install.crs.config.ignoreDownNodes=false \
oracle.install.config.managementOption=NONE \
oracle.install.config.omsPort=0 \
oracle.install.crs.rootconfig.executeRootScript=false
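The clusterNodes value is the parameter most likely to be mistyped, since each entry repeats the host name. A small sketch for generating it from a list of host names is below. It assumes the VIP names follow the host-vip pattern used in /etc/hosts in this article and that every node is a HUB node, as in the command above; build_cluster_nodes is a hypothetical helper name, not an Oracle tool.

```shell
#!/usr/bin/env bash
# Sketch: build the oracle.install.crs.config.clusterNodes value.
# Assumes each VIP is named "<host>-vip" and every node has the HUB role.
build_cluster_nodes() {
  local out="" host
  for host in "$@"; do
    # Prefix a comma only when out already holds an earlier entry.
    out+="${out:+,}${host}:${host}-vip:HUB"
  done
  printf '%s\n' "$out"
}

# Usage:
#   build_cluster_nodes oracle19crac01 oracle19crac02
```

The result can then be passed as oracle.install.crs.config.clusterNodes="$(build_cluster_nodes oracle19crac01 oracle19crac02)" instead of typing the list by hand.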

Run the installation command above directly (adjust the deployment parameters to your own environment first):

grid@oracle19crac01[+ASM1]/u01/app$ ${ORACLE_HOME}/gridSetup.sh -ignorePrereq -waitforcompletion -silent \

> -responseFile ${ORACLE_HOME}/install/response/gridsetup.rsp \

> INVENTORY_LOCATION=/u01/app/oraInventory \

> SELECTED_LANGUAGES=en,en_GB \

> oracle.install.option=CRS_CONFIG \

> ORACLE_BASE=/u01/app/grid \

> oracle.install.asm.OSDBA=asmdba \

> oracle.install.asm.OSASM=asmadmin \

> oracle.install.asm.OSOPER=asmoper \

> oracle.install.crs.config.scanType=LOCAL_SCAN \

> oracle.install.crs.config.clusterName=racdb \

> oracle.install.crs.config.gpnp.scanName=oracle19crac-scan \

> oracle.install.crs.config.gpnp.scanPort=1521 \

> oracle.install.crs.config.ClusterConfiguration=STANDALONE \

> oracle.install.crs.config.configureAsExtendedCluster=false \

> oracle.install.crs.config.gpnp.configureGNS=false \

> oracle.install.crs.config.autoConfigureClusterNodeVIP=false \

> oracle.install.crs.config.clusterNodes=oracle19crac01:oracle19crac01-vip:HUB,oracle19crac02:oracle19crac02-vip:HUB \

> oracle.install.crs.config.networkInterfaceList=ens160:172.16.200.0:1,ens192:192.168.0.0:5 \

> oracle.install.asm.configureGIMRDataDG=false \

> oracle.install.crs.config.useIPMI=false \

> oracle.install.asm.storageOption=ASM \

> oracle.install.asmOnNAS.configureGIMRDataDG=false \

> oracle.install.asm.SYSASMPassword=hbxy@zk_r00t \

> oracle.install.asm.diskGroup.name=SYSDG \

> oracle.install.asm.diskGroup.redundancy=NORMAL \

> oracle.install.asm.diskGroup.AUSize=4 \

> oracle.install.asm.diskGroup.disksWithFailureGroupNames=/dev/asmdisks/asmdisk0b,,/dev/asmdisks/asmdisk0c,,/dev/asmdisks/asmdisk0d, \

> oracle.install.asm.diskGroup.disks=/dev/asmdisks/asmdisk0b,/dev/asmdisks/asmdisk0c,/dev/asmdisks/asmdisk0d \

> oracle.install.asm.diskGroup.diskDiscoveryString=/dev/asmdisks/* \

> oracle.install.asm.configureAFD=false \

> oracle.install.asm.monitorPassword=hbxy@zk_r00t \

> oracle.install.crs.configureRHPS=false \

> oracle.install.crs.config.ignoreDownNodes=false \

> oracle.install.config.managementOption=NONE \

> oracle.install.config.omsPort=0 \

> oracle.install.crs.rootconfig.executeRootScript=false

Launching Oracle Grid Infrastructure Setup Wizard...

[WARNING] [INS-30011] The SYS password entered does not conform to the Oracle recommended standards.

CAUSE: Oracle recommends that the password entered should be at least 8 characters in length, contain at least 1 uppercase character, 1 lower case character and 1 digit [0-9].

ACTION: Provide a password that conforms to the Oracle recommended standards.

[WARNING] [INS-30011] The ASMSNMP password entered does not conform to the Oracle recommended standards.

CAUSE: Oracle recommends that the password entered should be at least 8 characters in length, contain at least 1 uppercase character, 1 lower case character and 1 digit [0-9].

ACTION: Provide a password that conforms to the Oracle recommended standards.

[WARNING] [INS-40109] The specified Oracle Base location is not empty on this server.

ACTION: Specify an empty location for Oracle Base.

[WARNING] [INS-13013] Target environment does not meet some mandatory requirements.

CAUSE: Some of the mandatory prerequisites are not met. See logs for details. /tmp/GridSetupActions2021-07-13_11-37-56PM/gridSetupActions2021-07-13_11-37-56PM.log

ACTION: Identify the list of failed prerequisite checks from the log: /tmp/GridSetupActions2021-07-13_11-37-56PM/gridSetupActions2021-07-13_11-37-56PM.log. Then either from the log file or from installation manual find the appropriate configuration to meet the prerequisites and fix it manually.

The response file for this session can be found at:

/u01/app/19.0.0/grid/install/response/grid_2021-07-13_11-37-56PM.rsp

You can find the log of this install session at:

/tmp/GridSetupActions2021-07-13_11-37-56PM/gridSetupActions2021-07-13_11-37-56PM.log

As a root user, execute the following script(s):

1. /u01/app/oraInventory/orainstRoot.sh

2. /u01/app/19.0.0/grid/root.sh

Execute /u01/app/oraInventory/orainstRoot.sh on the following nodes:

[oracle19crac01, oracle19crac02]

Execute /u01/app/19.0.0/grid/root.sh on the following nodes:

[oracle19crac01, oracle19crac02]

Run the script on the local node first. After successful completion, you can start the script in parallel on all other nodes.

Successfully Setup Software with warning(s).

As install user, execute the following command to complete the configuration.

/u01/app/19.0.0/grid/gridSetup.sh -executeConfigTools -responseFile /u01/app/19.0.0/grid/install/response/gridsetup.rsp [-silent]

Moved the install session logs to:

/u01/app/oraInventory/logs/GridSetupActions2021-07-13_11-37-56PM

grid@oracle19crac01[+ASM1]/u01/app$

grid@oracle19crac01[+ASM1]/u01/app$
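The two INS-30011 warnings in the output above spell out the recommended password shape: at least 8 characters, with at least one uppercase letter, one lowercase letter and one digit. A quick sketch for checking a candidate password against exactly that rule before passing it to gridSetup.sh is below. It only mirrors the wording of the warning; the installer may apply further checks, and meets_ins30011 is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: succeed (exit 0) when a password satisfies the rule quoted in INS-30011:
# length >= 8, at least one uppercase, one lowercase and one digit.
meets_ins30011() {
  local pw=$1
  (( ${#pw} >= 8 )) &&
    [[ $pw == *[[:upper:]]* ]] &&
    [[ $pw == *[[:lower:]]* ]] &&
    [[ $pw == *[[:digit:]]* ]]
}

# Usage:
#   meets_ins30011 "$candidate" || echo "password would trigger INS-30011" >&2
```

The placeholder password oracle used in the command template fails this check on length alone, which is why the warning appears when it is used unchanged.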

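Note: the messages above are warnings rather than errors and can be ignored for now. Once "Successfully Setup Software with warning(s)." appears, the Grid software setup is essentially complete, but the remaining steps below still have to be carried out. First run /u01/app/oraInventory/orainstRoot.sh on node 1, then on node 2.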

[root@oracle19crac01 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@oracle19crac02 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.
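The root scripts have to be run node by node, and the installer output asks for the local node to finish first. If you prefer to drive this from one terminal, a minimal sketch of a strictly sequential runner is shown here. It assumes root can SSH to each node, which this article does not set up (user equivalence was only verified for the grid user), so treat it as an illustration. run_in_order and the RUNNER variable are hypothetical names; RUNNER exists only so the loop can be dry-run with echo.

```shell
#!/usr/bin/env bash
# Sketch: run one root script on each node in turn, stopping at the first failure.
# RUNNER defaults to ssh; set RUNNER=echo for a dry run that only prints the calls.
run_in_order() {
  local script=$1; shift
  local node
  for node in "$@"; do
    echo "==> ${node}: ${script}"
    ${RUNNER:-ssh} "root@${node}" "${script}" || {
      echo "root script failed on ${node}; stopping" >&2
      return 1
    }
  done
}

# Dry run:
#   RUNNER=echo run_in_order /u01/app/19.0.0/grid/root.sh oracle19crac01 oracle19crac02
```

Stopping at the first failure matters here, because running root.sh on the second node makes little sense if the first node did not finish configuring the cluster.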

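Next, run root.sh. Run it one node at a time, waiting for it to finish on one node before starting it on the other; root.sh takes quite a while to complete.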

[root@oracle19crac01 ~]# /u01/app/19.0.0/grid/root.sh

Check /u01/app/19.0.0/grid/install/root_oracle19crac01_2021-07-13_23-52-16-690504988.log for the output of root script

[root@oracle19crac01 ~]#

We can open a new terminal window and follow the log that root.sh writes while it runs:

[root@oracle19crac01 ~]# tail -100f /u01/app/19.0.0/grid/install/root_oracle19crac01_2021-07-13_23-52-16-690504988.log

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/19.0.0/grid

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/19.0.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/oracle19crac01/crsconfig/rootcrs_oracle19crac01_2021-07-13_11-52-38PM.log

2021/07/13 23:52:56 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.

2021/07/13 23:52:56 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.

2021/07/13 23:52:56 CLSRSC-363: User ignored prerequisites during installation

2021/07/13 23:52:56 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.

2021/07/13 23:53:00 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.

2021/07/13 23:53:01 CLSRSC-594: Executing installation step 5 of 19: 'SetupOSD'.

2021/07/13 23:53:01 CLSRSC-594: Executing installation step 6 of 19: 'CheckCRSConfig'.

2021/07/13 23:53:02 CLSRSC-594: Executing installation step 7 of 19: 'SetupLocalGPNP'.

2021/07/13 23:53:26 CLSRSC-594: Executing installation step 8 of 19: 'CreateRootCert'.

2021/07/13 23:53:33 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.

2021/07/13 23:53:34 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

2021/07/13 23:53:55 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.

2021/07/13 23:53:55 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.

2021/07/13 23:54:05 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.

2021/07/13 23:54:05 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.service'

2021/07/13 23:54:37 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.

2021/07/13 23:54:48 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.

2021/07/13 23:54:58 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.

2021/07/13 23:55:08 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

ASM has been created and started successfully.

[DBT-30001] Disk groups created successfully. Check /u01/app/grid/cfgtoollogs/asmca/asmca-210713PM115544.log for details.

2021/07/13 23:56:38 CLSRSC-482: Running command: '/u01/app/19.0.0/grid/bin/ocrconfig -upgrade grid oinstall'

CRS-4256: Updating the profile

Successful addition of voting disk b59ce18f93a64fdcbf425a59bb84e8f6.

Successful addition of voting disk e4afa6480cbd4fc3bf31499b32a87a89.

Successful addition of voting disk 386c716da04f4f66bfc4ca43f4e95dd0.

Successfully replaced voting disk group with +SYSDG.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE b59ce18f93a64fdcbf425a59bb84e8f6 (/dev/asmdisks/asmdisk0c) [SYSDG]

2. ONLINE e4afa6480cbd4fc3bf31499b32a87a89 (/dev/asmdisks/asmdisk0d) [SYSDG]

3. ONLINE 386c716da04f4f66bfc4ca43f4e95dd0 (/dev/asmdisks/asmdisk0b) [SYSDG]

Located 3 voting disk(s).

2021/07/13 23:58:36 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

2021/07/13 23:59:51 CLSRSC-343: Successfully started Oracle Clusterware stack

2021/07/13 23:59:51 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

2021/07/14 00:01:51 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

2021/07/14 00:02:31 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

The final "succeeded" line shows that root.sh ran without problems on node 1. Next, run root.sh on node 2:

[root@oracle19crac02 app]# /u01/app/19.0.0/grid/root.sh

Check /u01/app/19.0.0/grid/install/root_oracle19crac02_2021-07-14_00-02-56-274031535.log for the output of root script

[root@oracle19crac02 app]#

As before, we follow the output log in a new window:

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/19.0.0/grid

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/19.0.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/oracle19crac02/crsconfig/rootcrs_oracle19crac02_2021-07-14_00-03-19AM.log

2021/07/14 00:03:29 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.

2021/07/14 00:03:29 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.

2021/07/14 00:03:29 CLSRSC-363: User ignored prerequisites during installation

2021/07/14 00:03:29 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.

2021/07/14 00:03:31 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.

2021/07/14 00:03:31 CLSRSC-594: Executing installation step 5 of 19: 'SetupOSD'.

2021/07/14 00:03:32 CLSRSC-594: Executing installation step 6 of 19: 'CheckCRSConfig'.

2021/07/14 00:03:32 CLSRSC-594: Executing installation step 7 of 19: 'SetupLocalGPNP'.

2021/07/14 00:03:34 CLSRSC-594: Executing installation step 8 of 19: 'CreateRootCert'.

2021/07/14 00:03:35 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.

2021/07/14 00:03:52 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.

2021/07/14 00:03:52 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.

2021/07/14 00:03:55 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.

2021/07/14 00:03:55 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.service'

2021/07/14 00:04:09 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

2021/07/14 00:04:20 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.

2021/07/14 00:04:23 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.

2021/07/14 00:04:25 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.

2021/07/14 00:04:27 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

2021/07/14 00:04:38 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

2021/07/14 00:05:31 CLSRSC-343: Successfully started Oracle Clusterware stack

2021/07/14 00:05:31 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

2021/07/14 00:05:59 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

2021/07/14 00:06:07 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded
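Rather than scanning a long root.sh log by eye, the check can be scripted. The following is only a minimal sketch: it assumes the log uses the CLSRSC-594 and CLSRSC-325 message formats shown above, and the function name `check_root_log` is hypothetical, not part of any Oracle tool.

```python
import re

# Message formats copied from the root.sh log shown above.
STEP_RE = re.compile(r"CLSRSC-594: Executing installation step (\d+) of (\d+): '(\w+)'")
DONE_RE = re.compile(r"CLSRSC-325: .*succeeded")


def check_root_log(text):
    """Summarize a root.sh log: total steps, missing step numbers, success flag."""
    seen = set()
    total = 0
    for match in STEP_RE.finditer(text):
        seen.add(int(match.group(1)))
        total = max(total, int(match.group(2)))
    # Any step number between 1 and total that never appeared in the log.
    missing = [n for n in range(1, total + 1) if n not in seen]
    return {
        "total": total,
        "missing": missing,
        "succeeded": DONE_RE.search(text) is not None,
    }


# Illustrative input only: two lines taken from the node 2 log above.
sample = (
    "2021/07/14 00:05:59 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.\n"
    "2021/07/14 00:06:07 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded\n"
)
print(check_root_log(sample))
```

Fed the complete log file, an empty `missing` list together with `succeeded` set to `True` corresponds to the result we saw on both nodes.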

Once root.sh has completed successfully on both node 1 and node 2, let's verify the state of the Grid cluster.

grid@oracle19crac01[+ASM1]/u01/app$crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE oracle19crac01 STABLE

ONLINE ONLINE oracle19crac02 STABLE

ora.chad

ONLINE ONLINE oracle19crac01 STABLE

ONLINE ONLINE oracle19crac02 STABLE

ora.net1.network

ONLINE ONLINE oracle19crac01 STABLE

ONLINE ONLINE oracle19crac02 STABLE

ora.ons

ONLINE ONLINE oracle19crac01 STABLE

ONLINE ONLINE oracle19crac02 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE oracle19crac01 STABLE

2 ONLINE ONLINE oracle19crac02 STABLE

3 OFFLINE OFFLINE STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE oracle19crac01 STABLE

ora.SYSDG.dg(ora.asmgroup)

1 ONLINE ONLINE oracle19crac01 STABLE

2 ONLINE ONLINE oracle19crac02 STABLE

3 OFFLINE OFFLINE STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE oracle19crac01 Started,STABLE

2 ONLINE ONLINE oracle19crac02 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE oracle19crac01 STABLE

2 ONLINE ONLINE oracle19crac02 STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE oracle19crac01 STABLE

ora.oracle19crac01.vip

1 ONLINE ONLINE oracle19crac01 STABLE

ora.oracle19crac02.vip

1 ONLINE ONLINE oracle19crac02 STABLE

ora.qosmserver

1 ONLINE ONLINE oracle19crac01 STABLE

ora.scan1.vip

1 ONLINE ONLINE oracle19crac01 STABLE

--------------------------------------------------------------------------------

grid@oracle19crac01[+ASM1]/u01/app$
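In the output above, what matters is that every resource whose Target is ONLINE also has State ONLINE. The rows with instance number 3 in the ora.asmgroup resources are OFFLINE/OFFLINE, which most likely just reflects an ASM resource count of 3 on a cluster that has only two nodes (an assumption; the text above does not state the configured count). A minimal sketch of that comparison, assuming the text layout of `crsctl stat res -t` shown above; `find_problem_resources` is a hypothetical helper name:

```python
def find_problem_resources(output):
    """Return (resource, target, state) for rows where Target is ONLINE but State is not."""
    problems = []
    current = None
    for raw in output.splitlines():
        line = raw.strip()
        if not line or line.startswith("-"):
            continue  # blank lines and separator rules
        if line.startswith("ora."):
            current = line  # resource name row
            continue
        fields = line.split()
        # Cluster resource rows start with an instance number; drop it.
        if fields[0].isdigit():
            fields = fields[1:]
        # Skip header rows such as "Name Target State ..." or "Local Resources".
        if current is None or len(fields) < 2 or fields[0] not in ("ONLINE", "OFFLINE"):
            continue
        target, state = fields[0], fields[1]
        if target == "ONLINE" and state != "ONLINE":
            problems.append((current, target, state))
    return problems


# Illustrative input only: the last row is a made-up failure, not taken from the real output.
sample = """
ora.asm(ora.asmgroup)
1 ONLINE ONLINE oracle19crac01 Started,STABLE
3 OFFLINE OFFLINE STABLE
ora.cvu
1 ONLINE OFFLINE STABLE
"""
print(find_problem_resources(sample))
```

Rows with Target OFFLINE are deliberately ignored, so the unused third ASM instance slot is not reported as a problem.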

5.3、Create the datadg and fradg disk groups

With the Grid cluster installed, we now need to create the datadg and fradg disk groups (adjust the disk list to the disks you actually have):

grid@oracle19crac01[+ASM1]/u01/app$sqlplus / as sysasm

SQL*Plus: Release 19.0.0.0.0 - Production on Wed Jul 14 00:09:24 2021

Version 19.3.0.0.0

Copyright (c) 1982, 2019, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.3.0.0.0

SQL> create diskgroup fradg external redundancy disk '/dev/asmdisks/asmdisk0e','/dev/asmdisks/asmdisk0f' ATTRIBUTE 'compatible.rdbms' = '19.0', 'compatible.asm' = '19.0';

Diskgroup created.

SQL> create diskgroup datadg external redundancy disk '/dev/asmdisks/asmdisk0g','/dev/asmdisks/asmdisk0h' ATTRIBUTE 'compatible.rdbms' = '19.0', 'compatible.asm' = '19.0';

Diskgroup created.

SQL> exit

Disconnected from Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.3.0.0.0

grid@oracle19crac01[+ASM1]/u01/app$srvctl start diskgroup -diskgroup datadg

grid@oracle19crac01[+ASM1]/u01/app$srvctl start diskgroup -diskgroup fradg

grid@oracle19crac01[+ASM1]/u01/app$asmcmd

ASMCMD> lsdg

State Type Rebal Sector Logical_Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED EXTERN N 512 512 4096 1048576 102398 102297 0 102297 0 N DATADG/

MOUNTED EXTERN N 512 512 4096 1048576 20478 20377 0 20377 0 N FRADG/

MOUNTED NORMAL N 512 512 4096 4194304 15348 14432 5116 4658 0 Y SYSDG/

ASMCMD>
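The Usable_file_MB column in the lsdg output can be checked by hand: ASM subtracts Req_mir_free_MB from Free_MB and divides the result by the number of mirror copies (1 for EXTERN, 2 for NORMAL, 3 for HIGH). A small sketch of that arithmetic using the figures above; the function name `usable_file_mb` is hypothetical:

```python
# Number of copies ASM keeps of each extent, per redundancy type.
MIRROR_COPIES = {"EXTERN": 1, "NORMAL": 2, "HIGH": 3}


def usable_file_mb(redundancy, free_mb, req_mir_free_mb):
    """Space actually available for new files, after mirroring overhead."""
    return (free_mb - req_mir_free_mb) // MIRROR_COPIES[redundancy]


# Figures copied from the lsdg output above: (Type, Free_MB, Req_mir_free_MB).
disk_groups = {
    "DATADG": ("EXTERN", 102297, 0),
    "FRADG": ("EXTERN", 20377, 0),
    "SYSDG": ("NORMAL", 14432, 5116),
}
for name, (redundancy, free_mb, req_mb) in disk_groups.items():
    print(name, usable_file_mb(redundancy, free_mb, req_mb))
```

For SYSDG this gives (14432 - 5116) / 2 = 4658 MB, matching the Usable_file_MB column, which is why a 15 GB NORMAL redundancy group offers far less usable space than its raw size suggests.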

As shown above, the disk groups were created successfully. Now let's verify the overall Grid configuration:

# Check the CRS status on the local node

grid@oracle19crac01[+ASM1]/u01/app/19.0.0/grid/bin$crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

# Check the CRS status of the cluster

grid@oracle19crac01[+ASM1]/u01/app/19.0.0/grid/bin$crsctl check cluster

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

# Check the configuration of the nodes in the cluster

grid@oracle19crac01[+ASM1]/u01/app/19.0.0/grid/bin$olsnodes -n -i -s

oracle19crac01 1 <none> Active

oracle19crac02 2 <none> Active

# Show the cluster's voting disks

grid@oracle19crac01[+ASM1]/u01/app/19.0.0/grid/bin$crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE b59ce18f93a64fdcbf425a59bb84e8f6 (/dev/asmdisks/asmdisk0c) [SYSDG]

2. ONLINE e4afa6480cbd4fc3bf31499b32a87a89 (/dev/asmdisks/asmdisk0d) [SYSDG]

3. ONLINE 386c716da04f4f66bfc4ca43f4e95dd0 (/dev/asmdisks/asmdisk0b) [SYSDG]

Located 3 voting disk(s).
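A node must be able to access more than half of the voting files to stay in the cluster, so the three files in SYSDG tolerate the loss of one. A rough sketch for parsing the votedisk listing and working out that margin, assuming the row layout shown above; `parse_votedisks` and `tolerated_failures` are hypothetical names:

```python
import re

# Row layout as printed above: "1. ONLINE <32 hex chars> (<path>) [<diskgroup>]"
ROW_RE = re.compile(r"^\s*\d+\.\s+(\w+)\s+([0-9a-f]{32})\s+\((\S+)\)\s+\[(\w+)\]")


def parse_votedisks(output):
    """Return one dict per voting file row found in the command output."""
    rows = []
    for line in output.splitlines():
        match = ROW_RE.match(line)
        if match:
            state, file_id, path, diskgroup = match.groups()
            rows.append({"state": state, "id": file_id, "path": path, "diskgroup": diskgroup})
    return rows


def tolerated_failures(count):
    """How many voting files can be lost while a strict majority remains."""
    return (count - 1) // 2


# Rows copied from the votedisk output above.
sample = """
1. ONLINE b59ce18f93a64fdcbf425a59bb84e8f6 (/dev/asmdisks/asmdisk0c) [SYSDG]
2. ONLINE e4afa6480cbd4fc3bf31499b32a87a89 (/dev/asmdisks/asmdisk0d) [SYSDG]
3. ONLINE 386c716da04f4f66bfc4ca43f4e95dd0 (/dev/asmdisks/asmdisk0b) [SYSDG]
"""
disks = parse_votedisks(sample)
online = [d for d in disks if d["state"] == "ONLINE"]
print(len(online), "online,", tolerated_failures(len(disks)), "failure(s) tolerated")
```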

# Show the cluster's SCAN VIP configuration

grid@oracle19crac01[+ASM1]/u01/app/19.0.0/grid/bin$srvctl config scan

SCAN name: oracle19crac-scan, Network: 1

Subnet IPv4: 172.16.200.0/255.255.255.0/ens160, static

Subnet IPv6:

SCAN 1 IPv4 VIP: 172.16.200.25

SCAN VIP is enabled.

grid@oracle19crac01[+ASM1]/u01/app/19.0.0/grid/bin$srvctl config scan_listener

SCAN Listeners for network 1: