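Earlier we deployed the Flannel network plugin in a Kubernetes cluster. Today we look at how to deploy the Calico network plugin instead. Kubernetes requires that all nodes in the cluster (including master nodes) can reach one another over the Pod network. Calico uses IPIP or BGP (IPIP by default) to build a Pod network that connects all the nodes.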

Installing Calico

Here we deploy Calico from a YAML manifest. First download the Calico manifest from https://docs.projectcalico.org/manifests/calico.yaml, then edit the YAML file as follows:

[root@kubernetes-01 work]# cp calico.yaml calico.yaml.orig

[root@kubernetes-01 work]# vi calico.yaml

[root@kubernetes-01 work]# diff calico.yaml calico.yaml.orig

672,675c672,673

< - name: CALICO_IPV4POOL_CIDR

< value: "172.30.0.0/16"

< - name: IP_AUTODETECTION_METHOD # add this environment variable to the DaemonSet

< value: interface=ens160 # the internal network interface

---

> # - name: CALICO_IPV4POOL_CIDR

> # value: "192.168.0.0/16"

[root@kubernetes-01 work]#

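- Change the Pod CIDR to 172.30.0.0/16;

- Calico auto-detects the network interface used for inter-node traffic. If a node has more than one NIC, you can set a regular expression that matches the name of the interface to use, such as ens160 above (change it to match the interface name on your own servers);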
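Before applying the manifest, it can help to confirm that both settings are really uncommented and carry the values you expect. The following is a minimal sketch, not part of the original procedure: `manifest_env_value` is a hypothetical helper name, and it assumes each `value:` line sits directly below its `- name:` line, as in the diff above.

```shell
#!/bin/sh
# Hypothetical helper: print the value of an uncommented env entry in a manifest.
# Assumes the "value:" line immediately follows the "- name: NAME" line.
manifest_env_value() {
    name="$1"
    file="$2"
    awk -v n="$name" '
        # Second step: the line after the matching name holds the value.
        found {
            sub(/^[ \t]*value:[ \t]*/, "")   # drop the "value:" key
            sub(/[ \t]*#.*$/, "")            # drop a trailing comment
            gsub(/"/, "")                    # drop surrounding quotes
            print
            exit
        }
        # First step: match only uncommented "- name: NAME" lines.
        $1 == "-" && $2 == "name:" && $3 == n { found = 1 }
    ' "$file"
}
```

For example, `manifest_env_value CALICO_IPV4POOL_CIDR calico.yaml` should print the Pod CIDR you set, and it prints nothing if the entry is still commented out.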

Then we create the Calico resources directly. Calico runs as a DaemonSet on every K8S node.
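The original transcript does not show the command used to create the resources. A minimal sketch of this step might look like the following; `deploy_calico` and the `KUBECTL` override are hypothetical names introduced here, and the DaemonSet name `calico-node` in `kube-system` is assumed from the pod names shown in the status output.

```shell
#!/bin/sh
# Hypothetical wrapper: apply the edited manifest, then wait for the DaemonSet.
# Set KUBECTL=echo to print the commands instead of running them (dry run).
deploy_calico() {
    manifest="${1:-calico.yaml}"
    kubectl_cmd="${KUBECTL:-kubectl}"
    # Create or update every resource defined in the manifest.
    "$kubectl_cmd" apply -f "$manifest" &&
    # Assumed DaemonSet name and namespace; block until the rollout finishes.
    "$kubectl_cmd" -n kube-system rollout status daemonset/calico-node --timeout=300s
}
```

Run `deploy_calico calico.yaml` from the directory that holds the edited manifest.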

Checking the running status

[root@kubernetes-01 work]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-bc44d789c-2k6ww 1/1 Running 8 2d13h 172.30.31.92 kubernetes-01 <none> <none>

calico-node-cg7lk 1/1 Running 4 2d13h 172.16.200.11 kubernetes-01 <none> <none>

calico-node-nzfqs 1/1 Running 4 2d13h 172.16.200.13 kubernetes-03 <none> <none>

calico-node-r5jg2 1/1 Running 4 2d13h 172.16.200.12 kubernetes-02 <none> <none>

coredns-59c6ddbf5d-95frh 1/1 Running 4 47h 172.30.11.49 kubernetes-03 <none> <none>

[root@kubernetes-01 work]#
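With more nodes, scanning this listing by eye gets tedious. As a rough sketch, the STATUS column can be filtered with awk; `not_running_calico_pods` is a hypothetical helper that assumes the default column layout shown above (NAME first, STATUS third).

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pods` output on stdin and print the
# names of calico-* pods whose STATUS column is not "Running".
not_running_calico_pods() {
    # NR > 1 skips the header row; $1 is NAME, $3 is STATUS.
    awk 'NR > 1 && $1 ~ /^calico-/ && $3 != "Running" { print $1 }'
}
```

Usage: `kubectl get pods -n kube-system -o wide | not_running_calico_pods`. Empty output means every Calico pod reports Running.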

[root@kubernetes-01 work]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:8b:40:6f brd ff:ff:ff:ff:ff:ff

inet 172.16.200.11/24 brd 172.16.200.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:91:a9:db:a3 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: dummy0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether ce:6f:a5:0d:8c:d9 brd ff:ff:ff:ff:ff:ff

5: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default

link/ether b6:e5:95:da:34:91 brd ff:ff:ff:ff:ff:ff

inet 10.254.0.2/32 brd 10.254.0.2 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.254.48.210/32 brd 10.254.48.210 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.254.187.158/32 brd 10.254.187.158 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.254.115.70/32 brd 10.254.115.70 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.254.155.245/32 brd 10.254.155.245 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.254.209.54/32 brd 10.254.209.54 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.254.0.1/32 brd 10.254.0.1 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.254.251.18/32 brd 10.254.251.18 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.254.92.78/32 brd 10.254.92.78 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.254.177.32/32 brd 10.254.177.32 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.254.149.232/32 brd 10.254.149.232 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.254.121.247/32 brd 10.254.121.247 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.254.194.245/32 brd 10.254.194.245 scope global kube-ipvs0

valid_lft forever preferred_lft forever

6: cali8ddf25b8472@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 0

7: cali24d9144d150@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 1

8: calib77e8e477e5@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 2

10: cali0f66ba8ac54@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 4

12: calif01a50e9588@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 6

13: califb1771331fe@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 7

14: calif6fc7f577a6@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 8

17: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1440 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 172.30.31.64/32 brd 172.30.31.64 scope global tunl0

valid_lft forever preferred_lft forever

28: cali9f33d0cea48@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 9

29: cali465062d6ac8@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 5

30: calic89bacf45d8@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1440 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 10

[root@kubernetes-01 work]#

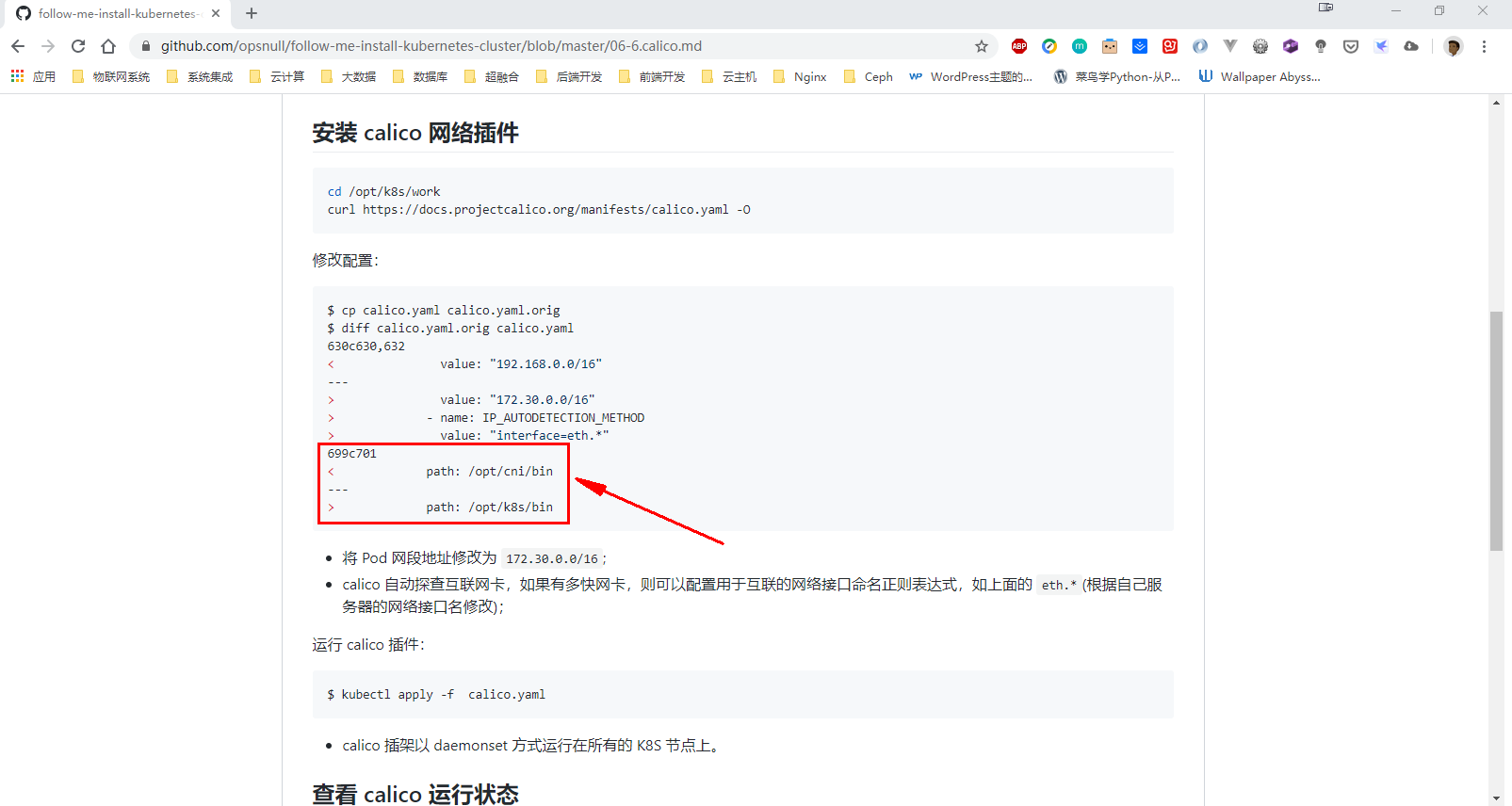

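Note: the document I followed earlier, https://github.com/opsnull/follow-me-install-kubernetes-cluster/blob/master/06-6.calico.md, contains a bug: the CNI binary directory does not need to be changed. The Kubelet periodically reports the current node status to the APIServer for use in scheduling. After the directory is changed, the Calico components are still deployed to /opt/cni/bin by default, so Calico fails to start and the Kubelet cannot obtain the node status.

The error looks like this: Warning FailedScheduling

If you have already changed the directory, you can manually create /opt/cni/bin with mkdir and copy the files from /opt/k8s/bin into it. After a short wait, Calico starts up on its own.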
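The workaround above can be sketched as a small shell function. `restore_cni_bin` is a hypothetical name; the default paths are the two directories named in the note, and the function takes them as arguments so it can be tried on scratch directories first.

```shell
#!/bin/sh
# Hypothetical helper: recreate the CNI directory and copy the binaries into it.
# Defaults follow the note above: source /opt/k8s/bin, target /opt/cni/bin.
restore_cni_bin() {
    src="${1:-/opt/k8s/bin}"
    dst="${2:-/opt/cni/bin}"
    # Create the target if missing, then copy the contents of src,
    # preserving file modes so the binaries stay executable.
    mkdir -p "$dst" && cp -a "$src"/. "$dst"/
}
```

Run `restore_cni_bin` as root on each affected node, then watch `kubectl get pods -n kube-system -o wide` until the calico-node pods report Running.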