
1. What is Harbor?

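Harbor is an open-source, trusted, cloud-native Docker registry project hosted by the CNCF. It can store, sign, and scan image content. Harbor extends the Docker registry with commonly needed features such as security and identity/permission management. It also supports replicating images between registries and provides more advanced security features such as user management, access control, and activity auditing. Newer versions also add support for hosting Helm chart repositories.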

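Harbor's core feature is adding a layer of permission control on top of the Docker registry. To do this, commands such as docker login, pull, and push have to be intercepted so that permission checks run before the operation proceeds. Docker Registry v2 already supports this: it has a built-in token authentication mechanism that delegates authentication to an external service, which implements the actual checks.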

Harbor official website: https://goharbor.io/

Harbor GitHub releases: https://github.com/goharbor/harbor/releases

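As mentioned above, Docker Registry v2 delegates authentication to an external service. How does that work? Taking docker login https://registry.qikqiak.com as an example, the authentication flow is:

1. The docker client receives the docker login command entered by the user and turns it into a call to the Engine API's RegistryLogin method;
2. The RegistryLogin method calls the registry service's auth method over HTTP;
3. Because we are using a v2 registry, the loginV2 method is called, which requests the /v2/ endpoint; this endpoint authenticates the request;
4. The request does not yet carry a token, so authentication fails with a 401 error, and the response header (WWW-Authenticate) tells the client which authentication server to request a token from;
5. On receiving this response, the registry client sends an authentication request to that server, with the encoded (HTTP Basic) username and password in the request header;
6. The authentication server reads the username and password from the header and checks them against the actual authentication backend, for example by looking up the user in a database or by verifying against an LDAP service;
7. On success, the server returns a token. The client then sends its request to the registry service again, this time carrying the token; the request now passes validation and returns status code 200;
8. The docker client receives the 200 status code, which means the operation succeeded, and prints Login Succeeded on the console.

That completes the login process. For the detailed principles and a walkthrough of the source code, see Yang Ming's article: https://www.qikqiak.com/post/harbor-code-analysis/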
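Steps 3 to 7 can be reproduced by hand with curl, which makes the handshake easier to see. The following is a minimal bash sketch, not Docker's actual implementation. It assumes curl is installed, that the auth server answers with single-line JSON containing a "token" field (as described in the Docker registry token specification), and that the registry, user, and password you pass in are your own. bearer_param and login_flow are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch of the Docker Registry v2 token handshake described above.
# Assumptions: curl is installed; registry/user/password are your own values;
# the token endpoint returns one-line JSON with a "token" field.

# Print one parameter (realm, service or scope) of a Bearer challenge such as:
#   Bearer realm="https://host/service/token",service="harbor-registry"
bearer_param() {
  local challenge="$1" key="$2"
  printf '%s\n' "$challenge" \
    | grep -o "${key}=\"[^\"]*\"" \
    | sed -E "s/^${key}=\"(.*)\"$/\1/"
}

login_flow() {
  local registry="$1" user="$2" pass="$3" challenge realm service token
  # Steps 3-4: an anonymous GET /v2/ should fail with 401 plus a challenge header
  challenge=$(curl -s -o /dev/null -D - "https://${registry}/v2/" \
    | tr -d '\r' | grep -i '^www-authenticate:' | cut -d' ' -f2-)
  realm=$(bearer_param "$challenge" realm)
  service=$(bearer_param "$challenge" service)
  # Steps 5-7: ask the auth server for a token using HTTP Basic credentials
  token=$(curl -s -u "${user}:${pass}" "${realm}?service=${service}" \
    | sed -E 's/.*"token": *"([^"]*)".*/\1/')
  # Step 7: retry /v2/ with the bearer token; 200 means the login works
  curl -s -o /dev/null -w '%{http_code}\n' \
    -H "Authorization: Bearer ${token}" "https://${registry}/v2/"
}
```

Usage: login_flow registry.z0ukun.com admin 'your-password' should print 200 once the credentials are accepted. The sed-based JSON extraction is deliberately crude; a real client would use a JSON parser.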

2. Installing Harbor

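Harbor supports several installation methods. The source tree ships an install script (make/install.sh) that runs each Harbor component with docker-compose. Here we install Harbor into a Kubernetes cluster instead. If you know well how Harbor's components interact, you can also write the resource manifests yourself, and if the basic configuration does not meet your needs you can go further with advanced configuration: all of Harbor's configuration templates live under /harbor/make/ and only need to be edited. Here, however, we use a simpler method, Helm. Harbor provides an official Helm chart, so installation is straightforward. First, download the Harbor chart onto the cluster where it will be installed: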

[root@kubernetes-01 ~]# git clone https://gitee.com/z0ukun/harbor-helm.git
Cloning into 'harbor-helm'...
remote: Enumerating objects: 4109, done.
remote: Counting objects: 100% (4109/4109), done.
remote: Compressing objects: 100% (1446/1446), done.
remote: Total 4109 (delta 2642), reused 4109 (delta 2642), pack-reused 0
Receiving objects: 100% (4109/4109), 15.44 MiB | 2.33 MiB/s, done.
Resolving deltas: 100% (2642/2642), done.
[root@kubernetes-01 ~]# cd harbor-helm/
[root@kubernetes-01 harbor-helm]# ls
cert  Chart.yaml  conf  CONTRIBUTING.md  docs  LICENSE  README.md  templates  test  values.yaml
[root@kubernetes-01 harbor-helm]#

Note: we cloned from a Gitee (码云) mirror to speed up the download, which gives the latest version. To install a different branch, switch to it with git checkout.

2.1 The Helm chart

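The most important file when installing a Helm chart is values.yaml: you change the configuration by overriding its properties. Below is an annotated walkthrough of the common settings in the Harbor Helm chart's values.yaml: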

expose:
  # How the service is exposed: "ingress", "clusterIP", "nodePort" or "loadBalancer"
  type: ingress
  # Whether to enable TLS
  tls:
    # Note: if the service is exposed via ingress and TLS is disabled, the port must be included when pulling/pushing images. See https://github.com/goharbor/harbor/issues/5291
    enabled: true
    certSource: auto
    auto:
      # The common name used to generate the certificate; only needed when the type is clusterIP or nodePort and secretName is empty
      commonName: ""
    secret:
      # To use your own TLS certificate and private key, fill in the name of the secret here. The secret must contain the certificate and key as tls.crt and tls.key; if not set, they are generated automatically
      secretName: ""
      # By default the Notary service uses the same certificate and key as above. To use a separate one, fill in the field below; only needed when the type is ingress
      notarySecretName: ""
  ingress:
    hosts:
      core: core.harbor.domain
      notary: notary.harbor.domain
    controller: default
    annotations:
      ingress.kubernetes.io/ssl-redirect: "true"
      ingress.kubernetes.io/proxy-body-size: "0"
      nginx.ingress.kubernetes.io/ssl-redirect: "true"
      nginx.ingress.kubernetes.io/proxy-body-size: "0"
    notary:
      annotations: {}
    harbor:
      annotations: {}
  # Name of the ClusterIP service
  clusterIP:
    name: harbor
    annotations: {}
    ports:
      httpPort: 80
      httpsPort: 443
      # Notary service port; only takes effect when notary.enabled is true
      notaryPort: 4443
  # Name of the NodePort service
  nodePort:
    name: harbor
    ports:
      http:
        port: 80
        nodePort: 30002
      https:
        port: 443
        nodePort: 30003
      notary:
        port: 4443
        nodePort: 30004
  # Name of the LoadBalancer service
  loadBalancer:
    name: harbor
    # Set the IP if the LoadBalancer supports IP assignment
    IP: ""
    ports:
      httpPort: 80
      httpsPort: 443
      notaryPort: 4443
    annotations: {}
    sourceRanges: []

# External URL of the Harbor core service. It is mainly used to:
# 1) populate the docker/helm commands shown on the portal
# 2) populate the token service URL returned to docker/notary clients
# Format: protocol://domain[:port]
# 1) if expose.type=ingress, "domain" is the value of expose.ingress.hosts.core
# 2) if expose.type=clusterIP, "domain" is the value of expose.clusterIP.name
# 3) if expose.type=nodePort, "domain" is the IP address of a k8s node
# If Harbor is deployed behind a proxy, set this to the proxy's URL
externalURL: https://core.harbor.domain

internalTLS:
  enabled: false
  certSource: "auto"
  trustCa: ""
  core:
    secretName: ""
    crt: ""
    key: ""
  jobservice:
    secretName: ""
    crt: ""
    key: ""
  registry:
    secretName: ""
    crt: ""
    key: ""
  portal:
    secretName: ""
    crt: ""
    key: ""
  chartmuseum:
    secretName: ""
    crt: ""
    key: ""
  # Certificate settings for the Trivy image scanner
  trivy:
    secretName: ""
    crt: ""
    key: ""

# Persistence is enabled by default; dynamically provisioning volumes in the k8s cluster requires a StorageClass object
# If you already have usable persistent volumes, specify your StorageClass in "storageClass" or set "existingClaim"
# For storing docker images and Helm charts you can also use "azure", "gcs", "s3", "swift" or "oss"; configure them in the "imageChartStorage" section
persistence:
  enabled: true
  # Set to "keep" to avoid removing the PVCs during a helm delete; leave empty to delete the PVCs when the chart is deleted
  resourcePolicy: "keep"
  persistentVolumeClaim:
    registry:
      existingClaim: ""
      # Specify the "storageClass", or leave it empty to use the default StorageClass; set it to "-" to disable dynamic provisioning
      storageClass: ""
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
    chartmuseum:
      existingClaim: ""
      storageClass: ""
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
    jobservice:
      existingClaim: ""
      storageClass: ""
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    # If an external database is used, the following settings are ignored
    database:
      existingClaim: ""
      storageClass: ""
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    # If an external Redis is used, the following settings are ignored
    redis:
      existingClaim: ""
      storageClass: ""
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    trivy:
      existingClaim: ""
      storageClass: ""
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
  # Storage backend for images and charts; see https://github.com/docker/distribution/blob/master/docs/configuration.md#storage
  imageChartStorage:
    # Whether to disable redirects for image and chart storage. Backends that don't support redirects (for example `s3` storage backed by minio) need it disabled; to do so, set `disableredirect=true`. See https://github.com/docker/distribution/blob/master/docs/configuration.md#redirect
    disableredirect: false
    # Storage type: "filesystem", "azure", "gcs", "s3", "swift" or "oss"; fill in the matching section below
    # To use a PV, this must be "filesystem"
    type: filesystem
    filesystem:
      rootdirectory: /storage
      #maxthreads: 100
    azure:
      accountname: accountname
      accountkey: base64encodedaccountkey
      container: containername
      #realm: core.windows.net
    gcs:
      bucket: bucketname
      # The base64 encoded json file which contains the key
      encodedkey: base64-encoded-json-key-file
      #rootdirectory: /gcs/object/name/prefix
      #chunksize: "5242880"
    s3:
      region: us-west-1
      bucket: bucketname
      #accesskey: awsaccesskey
      #secretkey: awssecretkey
      #regionendpoint: http://myobjects.local
      #encrypt: false
      #keyid: mykeyid
      #secure: true
      #skipverify: false
      #v4auth: true
      #chunksize: "5242880"
      #rootdirectory: /s3/object/name/prefix
      #storageclass: STANDARD
      #multipartcopychunksize: "33554432"
      #multipartcopymaxconcurrency: 100
      #multipartcopythresholdsize: "33554432"
    swift:
      authurl: https://storage.myprovider.com/v3/auth
      username: username
      password: password
      container: containername
      #region: fr
      #tenant: tenantname
      #tenantid: tenantid
      #domain: domainname
      #domainid: domainid
      #trustid: trustid
      #insecureskipverify: false
      #chunksize: 5M
      #prefix:
      #secretkey: secretkey
      #accesskey: accesskey
      #authversion: 3
      #endpointtype: public
      #tempurlcontainerkey: false
      #tempurlmethods:
    oss:
      accesskeyid: accesskeyid
      accesskeysecret: accesskeysecret
      region: regionname
      bucket: bucketname
      #endpoint: endpoint
      #internal: false
      #encrypt: false
      #secure: true
      #chunksize: 10M
      #rootdirectory: rootdirectory

imagePullPolicy: IfNotPresent

imagePullSecrets:
#  - name: docker-registry-secret
#  - name: internal-registry-secret

# The update strategy for deployments with persistent volumes(jobservice, registry
# and chartmuseum): "RollingUpdate" or "Recreate"
# Set it as "Recreate" when "RWM" for volumes isn't supported
updateStrategy:
  type: RollingUpdate

logLevel: info

# Initial password of the Harbor admin user; change it through the Portal after Harbor starts
harborAdminPassword: "Harbor12345"

caSecretName: ""

# The secret key used for encryption; must be a 16-character string
secretKey: "not-a-secure-key"

proxy:
  httpProxy:
  httpsProxy:
  noProxy: 127.0.0.1,localhost,.local,.internal
  components:
    - core
    - jobservice
    - trivy

# If the service is exposed via "ingress", the Nginx below is not used
nginx:
  image:
    repository: goharbor/nginx-photon
    tag: dev
  serviceAccountName: ""
  automountServiceAccountToken: false
  replicas: 1
  # resources:
  #  requests:
  #    memory: 256Mi
  #    cpu: 100m
  nodeSelector: {}
  tolerations: []
  affinity: {}
  # Additional Deployment annotations
  podAnnotations: {}
  priorityClassName:

portal:
  image:
    repository: goharbor/harbor-portal
    tag: dev
  serviceAccountName: ""
  automountServiceAccountToken: false
  replicas: 1
  # resources:
  #  requests:
  #    memory: 256Mi
  #    cpu: 100m
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
  priorityClassName:

core:
  image:
    repository: goharbor/harbor-core
    tag: dev
  serviceAccountName: ""
  automountServiceAccountToken: false
  replicas: 1
  startupProbe:
    enabled: true
    initialDelaySeconds: 10
  # resources:
  #  requests:
  #    memory: 256Mi
  #    cpu: 100m
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
  secret: ""
  secretName: ""
  xsrfKey: ""
  priorityClassName:

jobservice:
  image:
    repository: goharbor/harbor-jobservice
    tag: dev
  replicas: 1
  serviceAccountName: ""
  automountServiceAccountToken: false
  maxJobWorkers: 10
  # Loggers for jobs: "file", "database" or "stdout"
  jobLoggers:
    - file
    # - database
    # - stdout
  # resources:
  #  requests:
  #    memory: 256Mi
  #    cpu: 100m
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
  secret: ""
  priorityClassName:

registry:
  serviceAccountName: ""
  automountServiceAccountToken: false
  registry:
    image:
      repository: goharbor/registry-photon
      tag: dev
    # resources:
    #  requests:
    #    memory: 256Mi
    #    cpu: 100m
  controller:
    image:
      repository: goharbor/harbor-registryctl
      tag: dev
    # resources:
    #  requests:
    #    memory: 256Mi
    #    cpu: 100m
  replicas: 1
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
  priorityClassName:
  secret: ""
  relativeurls: false
  credentials:
    username: "harbor_registry_user"
    password: "harbor_registry_password"
    # e.g. "htpasswd -nbBC10 $username $password"
    htpasswd: "harbor_registry_user:$2y$10$9L4Tc0DJbFFMB6RdSCunrOpTHdwhid4ktBJmLD00bYgqkkGOvll3m"
  middleware:
    enabled: false
    type: cloudFront
    cloudFront:
      baseurl: example.cloudfront.net
      keypairid: KEYPAIRID
      duration: 3000s
      ipfilteredby: none
      privateKeySecret: "my-secret"

chartmuseum:
  enabled: true
  serviceAccountName: ""
  automountServiceAccountToken: false
  absoluteUrl: false
  image:
    repository: goharbor/chartmuseum-photon
    tag: dev
  replicas: 1
  # resources:
  #  requests:
  #    memory: 256Mi
  #    cpu: 100m
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
  priorityClassName:

trivy:
  enabled: true
  image:
    repository: goharbor/trivy-adapter-photon
    tag: dev
  serviceAccountName: ""
  automountServiceAccountToken: false
  replicas: 1
  debugMode: false
  vulnType: "os,library"
  severity: "UNKNOWN,LOW,MEDIUM,HIGH,CRITICAL"
  ignoreUnfixed: false
  insecure: false
  gitHubToken: ""
  skipUpdate: false
  resources:
    requests:
      cpu: 200m
      memory: 512Mi
    limits:
      cpu: 1
      memory: 1Gi
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
  priorityClassName:

notary:
  enabled: true
  server:
    serviceAccountName: ""
    automountServiceAccountToken: false
    image:
      repository: goharbor/notary-server-photon
      tag: dev
    replicas: 1
    # resources:
    #  requests:
    #    memory: 256Mi
    #    cpu: 100m
    nodeSelector: {}
    tolerations: []
    affinity: {}
    podAnnotations: {}
    priorityClassName:
  signer:
    serviceAccountName: ""
    automountServiceAccountToken: false
    image:
      repository: goharbor/notary-signer-photon
      tag: dev
    replicas: 1
    # resources:
    #  requests:
    #    memory: 256Mi
    #    cpu: 100m
    nodeSelector: {}
    tolerations: []
    affinity: {}
    podAnnotations: {}
    priorityClassName:
  secretName: ""

database:
  # To use an external database, set type to "external" and fill in the connection information in the "external" section
  type: internal
  internal:
    serviceAccountName: ""
    automountServiceAccountToken: false
    image:
      repository: goharbor/harbor-db
      tag: dev
    # Initial superuser password of the internal database
    password: "changeit"
    shmSizeLimit: 512Mi
    # resources:
    #  requests:
    #    memory: 256Mi
    #    cpu: 100m
    nodeSelector: {}
    tolerations: []
    affinity: {}
    priorityClassName:
    initContainer:
      migrator: {}
      # resources:
      #  requests:
      #    memory: 128Mi
      #    cpu: 100m
      permissions: {}
      # resources:
      #  requests:
      #    memory: 128Mi
      #    cpu: 100m
  external:
    host: "192.168.0.1"
    port: "5432"
    username: "user"
    password: "password"
    coreDatabase: "registry"
    notaryServerDatabase: "notary_server"
    notarySignerDatabase: "notary_signer"
    sslmode: "disable"
  maxIdleConns: 100
  maxOpenConns: 900
  podAnnotations: {}

redis:
  # To use an external Redis, set type to "external" and fill in the connection information in the "external" section
  type: internal
  internal:
    serviceAccountName: ""
    automountServiceAccountToken: false
    image:
      repository: goharbor/redis-photon
      tag: dev
    # resources:
    #  requests:
    #    memory: 256Mi
    #    cpu: 100m
    nodeSelector: {}
    tolerations: []
    affinity: {}
    priorityClassName:
  external:
    addr: "192.168.0.2:6379"
    sentinelMasterSet: ""
    # coreDatabaseIndex must be set to 0
    coreDatabaseIndex: "0"
    jobserviceDatabaseIndex: "1"
    registryDatabaseIndex: "2"
    chartmuseumDatabaseIndex: "3"
    trivyAdapterIndex: "5"
    password: ""
  podAnnotations: {}

exporter:
  replicas: 1
  # resources:
  #  requests:
  #    memory: 256Mi
  #    cpu: 100m
  podAnnotations: {}
  serviceAccountName: ""
  automountServiceAccountToken: false
  image:
    repository: goharbor/harbor-exporter
    tag: dev
  nodeSelector: {}
  tolerations: []
  affinity: {}
  cacheDuration: 23
  cacheCleanInterval: 14400
  priorityClassName:

metrics:
  enabled: false
  core:
    path: /metrics
    port: 8001
  registry:
    path: /metrics
    port: 8001
  jobservice:
    path: /metrics
    port: 8001
  exporter:
    path: /metrics
    port: 8001
  serviceMonitor:
    enabled: false
    additionalLabels: {}
    interval: ""
    metricRelabelings: []
      # - action: keep
      #   regex: 'kube_(daemonset|deployment|pod|namespace|node|statefulset).+'
      #   sourceLabels: [__name__]
    # Relabel configs to apply to samples before ingestion.
    relabelings: []
      # - sourceLabels: [__meta_kubernetes_pod_node_name]
      #   separator: ;
      #   regex: ^(.*)$
      #   targetLabel: nodename
      #   replacement: $1
      #   action: replace
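The values file above requires secretKey to be a 16-character string, and the default "not-a-secure-key" is clearly not meant to stay in a real deployment. Below is a minimal bash sketch for generating and checking such a key. gen_secret_key and check_secret_key are hypothetical helpers, not part of the chart; the generator assumes /dev/urandom and the standard od tool are available.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the chart's secretKey value (must be 16 characters).

# Generate 16 hexadecimal characters from 8 random bytes.
gen_secret_key() {
  od -An -tx1 -N8 /dev/urandom | tr -d ' \n'
}

# Succeed only if the argument is exactly 16 characters long.
check_secret_key() {
  [ "${#1}" -eq 16 ]
}
```

The generated value can then be passed at install time, for example with helm install ... --set secretKey="$(gen_secret_key)", or written into your own values file. Hex output is used so the key never contains characters that need quoting in YAML.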

2.2 Defining the values file

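With the configuration reference above, we can override whichever values we need. Here we create our own values file with the contents below. The listing names it harbor-z0ukun-values.yaml, while the install command in section 2.5 passes harbor-values.yaml, so use whichever name you actually saved: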

[root@kubernetes01 harbor-helm]# cat harbor-z0ukun-values.yaml
# Ingress entry point configuration
expose:
  type: ingress
  tls:
    # Whether to enable HTTPS; set to false if you don't want HTTPS
    enabled: true
    # Use the secret-mounted certificate mode with a manually created secret (optional; see section 2.3 for creating the certificate)
    certSource: secret
    secret:
      secretName: "register-z0ukun-tls"
      notarySecretName: "register-z0ukun-tls"
  ingress:
    hosts:
      # Harbor access domains
      core: registry.z0ukun.com
      notary: notary.z0ukun.com
    controller: default
    annotations:
      ingress.kubernetes.io/ssl-redirect: "true"
      ingress.kubernetes.io/proxy-body-size: "0"
      # For a Traefik ingress, use the following
      kubernetes.io/ingress.class: "traefik"
      traefik.ingress.kubernetes.io/router.tls: 'true'
      traefik.ingress.kubernetes.io/router.entrypoints: websecure
      # For an NGINX ingress, use the following instead (optional)
      # nginx.ingress.kubernetes.io/ssl-redirect: "true"
      # nginx.ingress.kubernetes.io/proxy-body-size: "0"
  # If you don't want to use Ingress, configure the parameters below instead, e.g. NodePort
  #clusterIP:
  #  name: harbor
  #  ports:
  #    httpPort: 80
  #    httpsPort: 443
  #    notaryPort: 4443
  #nodePort:
  #  name: harbor
  #  ports:
  #    http:
  #      port: 80
  #      nodePort: 30011
  #    https:
  #      port: 443
  #      nodePort: 30012
  #    notary:
  #      port: 4443
  #      nodePort: 30013
# If Harbor is deployed behind a proxy, set this to the proxy's URL; it should normally match the Ingress domain configured above
externalURL: https://registry.z0ukun.com
# Persistence for each Harbor component; to reuse PVCs created in advance, set each component's existingClaim to the matching PVC name (here a StorageClass is used instead)
persistence:
  enabled: true
  # Retention policy: "keep" stops Helm from deleting the PVCs (and their data) when the release is deleted
  resourcePolicy: "keep"
  persistentVolumeClaim:
    registry:
      existingClaim: ""
      storageClass: "harbor-rook-ceph-block"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
    chartmuseum:
      existingClaim: ""
      storageClass: "harbor-rook-ceph-block"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
    jobservice:
      existingClaim: ""
      storageClass: "harbor-rook-ceph-block"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    database:
      existingClaim: ""
      storageClass: "harbor-rook-ceph-block"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    redis:
      existingClaim: ""
      storageClass: "harbor-rook-ceph-block"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 1Gi
    trivy:
      existingClaim: ""
      storageClass: "harbor-rook-ceph-block"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 5Gi
# Password of the default admin user (defaults to Harbor12345 if not set)
harborAdminPassword: "z0uKun123456"
# Log level
logLevel: info
[root@kubernetes01 harbor-helm]#
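The comment in this file says externalURL should normally match the Ingress core host, and a mismatch usually shows up later as broken login or token URLs. Below is a minimal bash sketch of a pre-install check. check_external_url is a hypothetical helper that uses plain awk/sed text matching, not a YAML parser, so it assumes the core host sits on a single unquoted "core: <host>" line, as in the file above.

```bash
#!/usr/bin/env bash
# Hypothetical consistency check: does externalURL point at expose.ingress.hosts.core?
# Plain text matching, not a YAML parser.
check_external_url() {
  local file="$1" core url_host
  # First "core: <value>" line; bare "core:" section keys (no value) are skipped
  core=$(awk '$1 == "core:" && NF >= 2 { print $2; exit }' "$file")
  # Take externalURL, then strip the scheme and any port or path
  url_host=$(awk '$1 == "externalURL:" { print $2; exit }' "$file" \
    | sed -E 's#^https?://##; s#[:/].*$##')
  if [ -n "$core" ] && [ "$core" = "$url_host" ]; then
    echo "ok: $core"
  else
    echo "mismatch: core=$core externalURL host=$url_host" >&2
    return 1
  fi
}
```

Usage: check_external_url harbor-z0ukun-values.yaml prints ok: registry.z0ukun.com for the file above.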

2.3 Custom certificate mount mode (optional)

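If you choose the secret-mounted certificate mode, you need to create the certificate manually and bind it through a secret. The commands are as follows: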

[root@kubernetes01 ca]# openssl req -newkey rsa:4096 -nodes -sha256 -keyout ca.key -x509 -days 3650 -out ca.crt
Generating a 4096 bit RSA private key
.........................................................................++
.......++
writing new private key to 'ca.key'
-----
You are about to be asked to enter information that will be incorporated
into your certificate request.
What you are about to enter is what is called a Distinguished Name or a DN.
There are quite a few fields but you can leave some blank
For some fields there will be a default value,
If you enter '.', the field will be left blank.
-----
Country Name (2 letter code) [XX]:
State or Province Name (full name) []:
Locality Name (eg, city) [Default City]:
Organization Name (eg, company) [Default Company Ltd]:
Organizational Unit Name (eg, section) []:
Common Name (eg, your name or your server's hostname) []:
Email Address []:
[root@kubernetes01 ca]# openssl req -newkey rsa:4096 -nodes -sha256 -keyout tls.key -out tls.csr
Generating a 4096 bit RSA private key
.........................
...++
...............................................................++
writing new private key to 'tls.key'
-----
You are about to be asked to enter information that will be incorporated
into your certificate request.
What you are about to enter is what is called a Distinguished Name or a DN.
There are quite a few fields but you can leave some blank
For some fields there will be a default value,
If you enter '.', the field will be left blank.
-----
Country Name (2 letter code) [XX]:
State or Province Name (full name) []:
Locality Name (eg, city) [Default City]:
Organization Name (eg, company) [Default Company Ltd]:
Organizational Unit Name (eg, section) []:
Common Name (eg, your name or your server's hostname) []:
Email Address []:
Please enter the following 'extra' attributes
to be sent with your certificate request
A challenge password []:
An optional company name []:
[root@kubernetes01 ca]# openssl x509 -req -days 3650 -in tls.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out tls.crt
Signature ok
subject=/C=XX/L=Default City/O=Default Company Ltd
Getting CA Private Key
[root@kubernetes01 ca]# kubectl create secret generic harbor-z0ukun-tls --from-file=tls.crt --from-file=tls.key --from-file=ca.crt -n harbor
secret/harbor-z0ukun-tls created
[root@kubernetes01 ca]# kubectl get secret -A
NAMESPACE   NAME                TYPE     DATA   AGE
harbor      harbor-z0ukun-tls   Opaque   3      11s

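In my own setup I did not actually use the secret-mounted certificate mode; instead I let the Traefik Ingress generate the certificate and the IngressRoute automatically. If you do use the secret mode, make sure the name of the secret you create matches secretName and notarySecretName in your values file (the session above creates harbor-z0ukun-tls, while the values file in section 2.2 refers to register-z0ukun-tls).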
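The interactive session above leaves every Distinguished Name field empty, so the server certificate carries no host name at all. Go-based clients built with Go 1.15 or later no longer fall back to the Common Name and expect a subjectAltName, so a non-interactive variant that puts the registry domain into both the CN and the SAN may be safer. Below is a minimal bash sketch, assuming OpenSSL is installed; make_harbor_certs and its output directory argument are illustrative names, and the file names mirror the session above.

```bash
#!/usr/bin/env bash
# Sketch: create a CA plus a server certificate for a Harbor domain, non-interactively.
# Assumption: OpenSSL is installed; file names mirror the interactive session above.
make_harbor_certs() {
  local domain="$1" outdir="${2:-.}"
  mkdir -p "$outdir"
  # 1) Self-signed CA; -subj supplies the DN so no prompts appear
  openssl req -x509 -newkey rsa:4096 -nodes -sha256 -days 3650 \
    -keyout "$outdir/ca.key" -out "$outdir/ca.crt" -subj "/CN=${domain}-ca" 2>/dev/null
  # 2) Server key and CSR whose CN is the registry domain
  openssl req -newkey rsa:4096 -nodes -sha256 \
    -keyout "$outdir/tls.key" -out "$outdir/tls.csr" -subj "/CN=${domain}" 2>/dev/null
  # 3) Sign the CSR with the CA and add the domain as a subjectAltName
  printf 'subjectAltName=DNS:%s\n' "$domain" > "$outdir/san.ext"
  openssl x509 -req -sha256 -days 3650 -in "$outdir/tls.csr" \
    -CA "$outdir/ca.crt" -CAkey "$outdir/ca.key" -CAcreateserial \
    -extfile "$outdir/san.ext" -out "$outdir/tls.crt" 2>/dev/null
}
```

After make_harbor_certs registry.z0ukun.com ./ca, the secret can be created from that directory with the same kubectl create secret generic ... --from-file=tls.crt --from-file=tls.key --from-file=ca.crt -n harbor command as above, using the name your values file expects (register-z0ukun-tls). If notary.z0ukun.com shares the certificate, it would also need to be listed in subjectAltName (e.g. DNS:registry.z0ukun.com,DNS:notary.z0ukun.com).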

2.4 Creating the StorageClass

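Only a few settings need customizing, and the main one is data persistence, which we configured by hand. The services above need usable PVCs or a StorageClass created in advance. Here we use a StorageClass named harbor-rook-ceph-block; adjust the accessMode or storage sizes to your actual needs (harbor-data-storageclass.yaml):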

apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: harbor-replicapool
  namespace: rook-ceph
spec:
  failureDomain: host
  replicated:
    size: 3
    requireSafeReplicaSize: true
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: harbor-rook-ceph-block
provisioner: rook-ceph.rbd.csi.ceph.com
parameters:
  clusterID: rook-ceph
  pool: harbor-replicapool
  imageFormat: "2"
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
  csi.storage.k8s.io/controller-expand-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph
  csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
  csi.storage.k8s.io/fstype: xfs
allowVolumeExpansion: true
reclaimPolicy: Delete

First create the CephBlockPool and StorageClass resources defined above:

[root@kubernetes-01 harbor-helm]# kubectl apply -f harbor-data-storageclass.yaml
cephblockpool.ceph.rook.io/harbor-replicapool created
storageclass.storage.k8s.io/harbor-rook-ceph-block created
[root@kubernetes-01 harbor-helm]# kubectl get CephBlockPool -n rook-ceph
NAME                 AGE
harbor-replicapool   7h11m
replicapool          29h
[root@kubernetes-01 harbor-helm]# kubectl get storageclass -n harbor
NAME                     PROVISIONER                  RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
harbor-rook-ceph-block   rook-ceph.rbd.csi.ceph.com   Delete          Immediate           true                   7h5m
rook-ceph-block          rook-ceph.rbd.csi.ceph.com   Delete          Immediate           false                  29h
[root@kubernetes-01 harbor-helm]#

2.5 Installing Harbor

Once these resources exist, install Harbor with the custom values file defined earlier:

[root@kubernetes01 harbor-helm]# helm search repo harbor
NAME            CHART VERSION   APP VERSION   DESCRIPTION
apphub/harbor   4.0.0           1.10.1        Harbor is an an open source trusted cloud nativ...
harbor/harbor   1.7.1           2.3.1         An open source trusted cloud native registry th...
[root@kubernetes01 harbor-helm]# helm install harbor harbor/harbor --version 1.7.1 -f harbor-values.yaml -n harbor
NAME: harbor
LAST DEPLOYED: Thu Jul 29 21:44:39 2021
NAMESPACE: harbor
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
Please wait for several minutes for Harbor deployment to complete.
Then you should be able to visit the Harbor portal at https://registry.z0ukun.com
For more details, please visit https://github.com/goharbor/harbor
[root@kubernetes01 harbor-helm]#
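The helm search output shows that a chart repository named harbor had already been added before this step, and the harbor namespace must exist before helm install -n harbor. Below is a minimal bash sketch that covers both, assuming Helm 3.2 or later (for --create-namespace) and the official chart repository at https://helm.goharbor.io. install_harbor is a hypothetical wrapper; it uses helm upgrade --install so that re-running it after editing the values file upgrades the release instead of failing.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the install step above.
# Assumptions: Helm >= 3.2, official chart repository at https://helm.goharbor.io.
install_harbor() {
  local values="$1" version="${2:-1.7.1}" ns="${3:-harbor}"
  # Fail early on a wrong file name (harbor-values.yaml vs harbor-z0ukun-values.yaml)
  [ -f "$values" ] || { echo "values file not found: $values" >&2; return 1; }
  helm repo add harbor https://helm.goharbor.io
  helm repo update
  # upgrade --install: installs on the first run, upgrades on later runs
  helm upgrade --install harbor harbor/harbor --version "$version" \
    -f "$values" -n "$ns" --create-namespace
}
```

Usage: install_harbor harbor-z0ukun-values.yaml 1.7.1 harbor. Installing the released chart, rather than the cloned repository, matters here because the values.yaml from the cloned branch shown in section 2.1 points every image at the dev tag.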

After the installation, verify that Harbor's resources have been created and are running normally:

[root@kubernetes01 harbor-helm]# kubectl get secret -n harbor
NAME                           TYPE                                  DATA   AGE
default-token-mvfzn            kubernetes.io/service-account-token   3      16m
harbor-chartmuseum             Opaque                                1      13m
harbor-core                    Opaque                                8      13m
harbor-database                Opaque                                1      13m
harbor-ingress                 kubernetes.io/tls                     3      13m
harbor-jobservice              Opaque                                2      13m
harbor-notary-server           Opaque                                5      13m
harbor-registry                Opaque                                2      13m
harbor-registry-htpasswd       Opaque                                1      13m
harbor-trivy                   Opaque                                2      13m
sh.helm.release.v1.harbor.v1   helm.sh/release.v1                    1      13m
[root@kubernetes01 harbor-helm]# kubectl get pod -n harbor
NAME                                    READY   STATUS    RESTARTS   AGE
harbor-chartmuseum-9668d67f7-nntlf      1/1     Running   0          13m
harbor-core-57b48998b5-9cxjh            1/1     Running   0          13m
harbor-database-0                       1/1     Running   0          13m
harbor-jobservice-5dcf78dc87-79fxd      1/1     Running   0          13m
harbor-notary-server-75c9588f76-2krb8   1/1     Running   1          13m
harbor-notary-signer-b4986db8d-bj4zl    1/1     Running   1          13m
harbor-portal-c55c48545-9qgnp           1/1     Running   0          13m
harbor-redis-0                          1/1     Running   0          13m
harbor-registry-77b85984bc-4zltn        2/2     Running   0          13m
harbor-trivy-0                          1/1     Running   0          13m
[root@kubernetes01 harbor-helm]# kubectl get pvc -n harbor
NAME                              STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS             AGE
data-harbor-redis-0               Bound    pvc-f2341a4a-7780-46eb-83ad-09e5efb8bbd5   1Gi        RWO            harbor-rook-ceph-block   13m
data-harbor-trivy-0               Bound    pvc-510b78d2-ed21-4a50-96c3-8e3bc81ee888   5Gi        RWO            harbor-rook-ceph-block   13m
database-data-harbor-database-0   Bound    pvc-e72cd985-e503-4e74-8960-62c36a8be648   1Gi        RWO            harbor-rook-ceph-block   13m
harbor-chartmuseum                Bound    pvc-10a7b303-027f-44dc-9b85-b4ea249bf91b   5Gi        RWO            harbor-rook-ceph-block   13m
harbor-jobservice                 Bound    pvc-0184419f-aa5a-4b25-91d4-260e8293f362   1Gi        RWO            harbor-rook-ceph-block   13m
harbor-registry                   Bound    pvc-c8bf9a72-3dc5-4aa4-ae5c-8c1dc48dc7ba   5Gi        RWO            harbor-rook-ceph-block   13m
[root@kubernetes01 harbor-helm]# kubectl get svc -n harbor
NAME                   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)             AGE
harbor-chartmuseum     ClusterIP   10.254.209.202   <none>        80/TCP              13m
harbor-core            ClusterIP   10.254.198.49    <none>        80/TCP              13m
harbor-database        ClusterIP   10.254.197.38    <none>        5432/TCP            13m
harbor-jobservice      ClusterIP   10.254.135.127   <none>        80/TCP              13m
harbor-notary-server   ClusterIP   10.254.252.0     <none>        4443/TCP            13m
harbor-notary-signer   ClusterIP   10.254.116.63    <none>        7899/TCP            13m
harbor-portal          ClusterIP   10.254.118.20    <none>        80/TCP              13m
harbor-redis           ClusterIP   10.254.71.102    <none>        6379/TCP            13m
harbor-registry        ClusterIP   10.254.167.186   <none>        5000/TCP,8080/TCP   13m
harbor-trivy           ClusterIP   10.254.246.126   <none>        8080/TCP            13m
[root@kubernetes01 harbor-helm]#

These are all the resource objects created by the Helm install. After a short wait the installation completes, and as shown above, every Pod is now in the Running state.
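Instead of reading these tables by eye, the check can be scripted. Below is a minimal bash sketch with two hypothetical helpers that read kubectl output (with --no-headers) on stdin: check_pods flags any Pod whose READY counts differ or whose STATUS is not Running, and check_pvcs flags any PVC that is not Bound. For the Pod part, kubectl wait --for=condition=Ready pod --all -n harbor --timeout=600s is a built-in alternative that also waits.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: feed them "kubectl get ... --no-headers" output on stdin.

# Pod columns: NAME READY STATUS RESTARTS AGE
check_pods() {
  awk '{
    split($2, r, "/")                       # READY is "ready/total"
    if (r[1] != r[2] || $3 != "Running") { print "not ready: " $1; bad = 1 }
  } END { exit bad }'
}

# PVC columns: NAME STATUS VOLUME CAPACITY ACCESS-MODES STORAGECLASS AGE
check_pvcs() {
  awk '$2 != "Bound" { print "not bound: " $1; bad = 1 } END { exit bad }'
}
```

Usage: kubectl get pod -n harbor --no-headers | check_pods && kubectl get pvc -n harbor --no-headers | check_pvcs prints nothing and exits 0 when everything looks healthy, as in the output above.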

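Note (important): if the first time you access Harbor you are told the username or password is incorrect, don't panic. First check whether you are accessing Harbor over TLS (HTTPS); if not, switch to HTTPS. Then restart the Redis service, or simply delete its Pod (harbor-redis-0; Kubernetes recreates it automatically), and try logging in again. That is what worked for me.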

3. Accessing Harbor

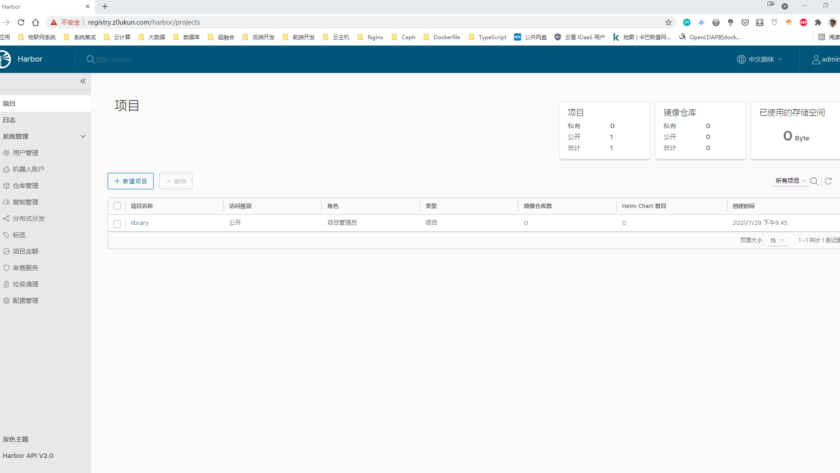

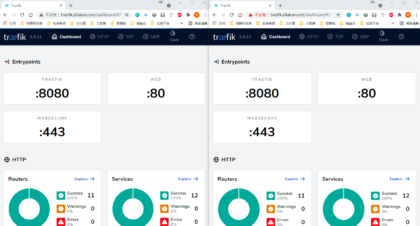

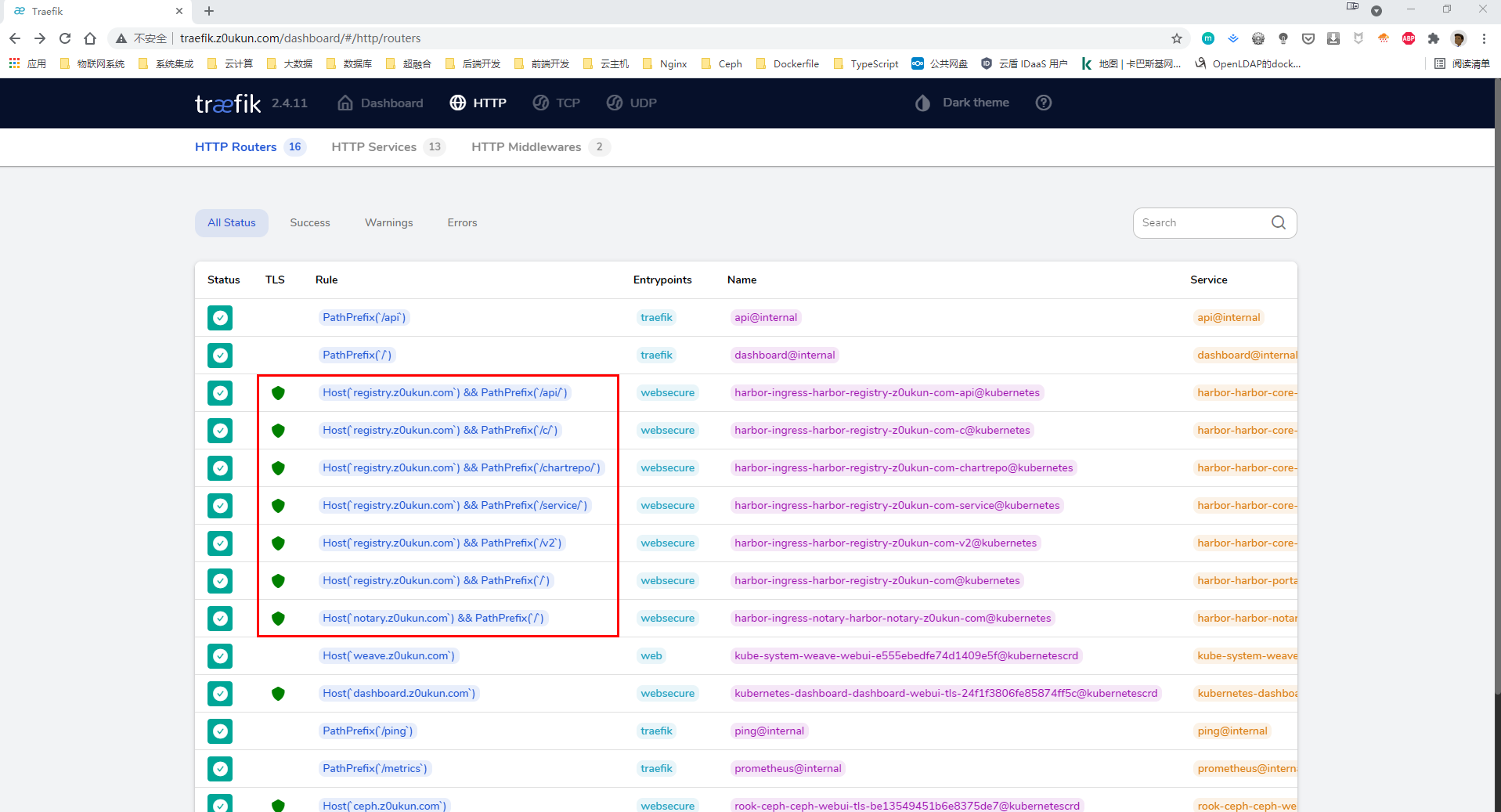

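First check in the Traefik dashboard whether Harbor's ingress routes have been created (or from the command line with kubectl get ingressroute -n harbor). If they exist, add an IP-to-domain mapping to your local hosts file, and you can then access Harbor at registry.z0ukun.com.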
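Adding the hosts mapping can be made idempotent so that repeated runs don't pile up duplicate lines. Below is a minimal bash sketch; add_hosts_entry is a hypothetical helper, and 192.168.1.100 in the example is a placeholder for the address of the node that serves your Traefik ingress. Pass a scratch file as the third argument to try it without touching /etc/hosts.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map a domain to an IP in a hosts file, only once.
add_hosts_entry() {
  local ip="$1" domain="$2" file="${3:-/etc/hosts}"
  # Already mapped? Ignore comment lines and compare host names as whole fields
  if awk -v d="$domain" '/^[[:space:]]*#/ { next }
      { for (i = 2; i <= NF; i++) if ($i == d) found = 1 }
      END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$domain" >> "$file"
}
```

Usage (as root): add_hosts_entry 192.168.1.100 registry.z0ukun.com, and likewise for notary.z0ukun.com if you use Notary.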

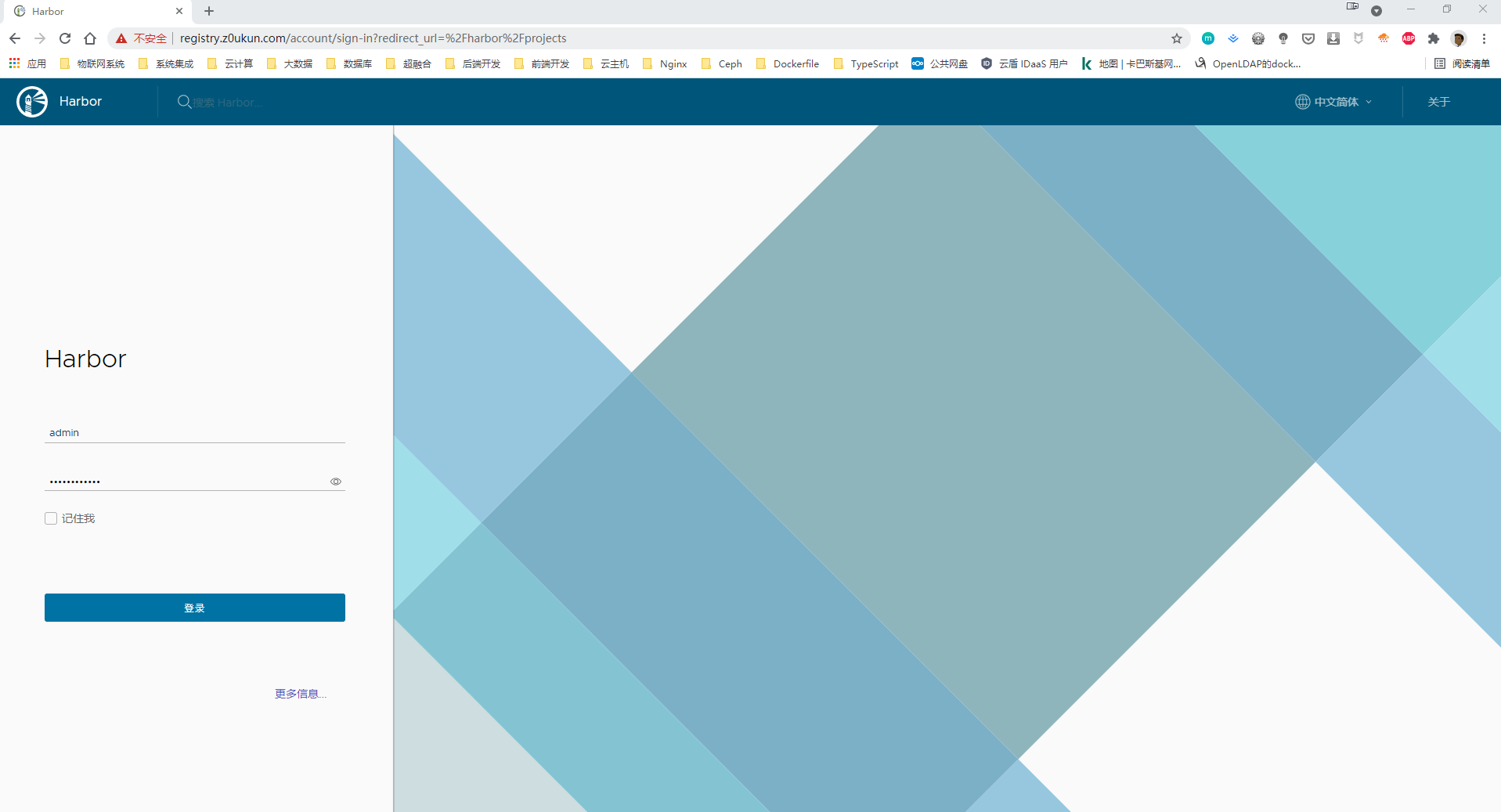

Then log in with username admin and password Harbor12345 to reach the Portal home page (in our case, the password is the harborAdminPassword value we overrode at install time, z0uKun123456):

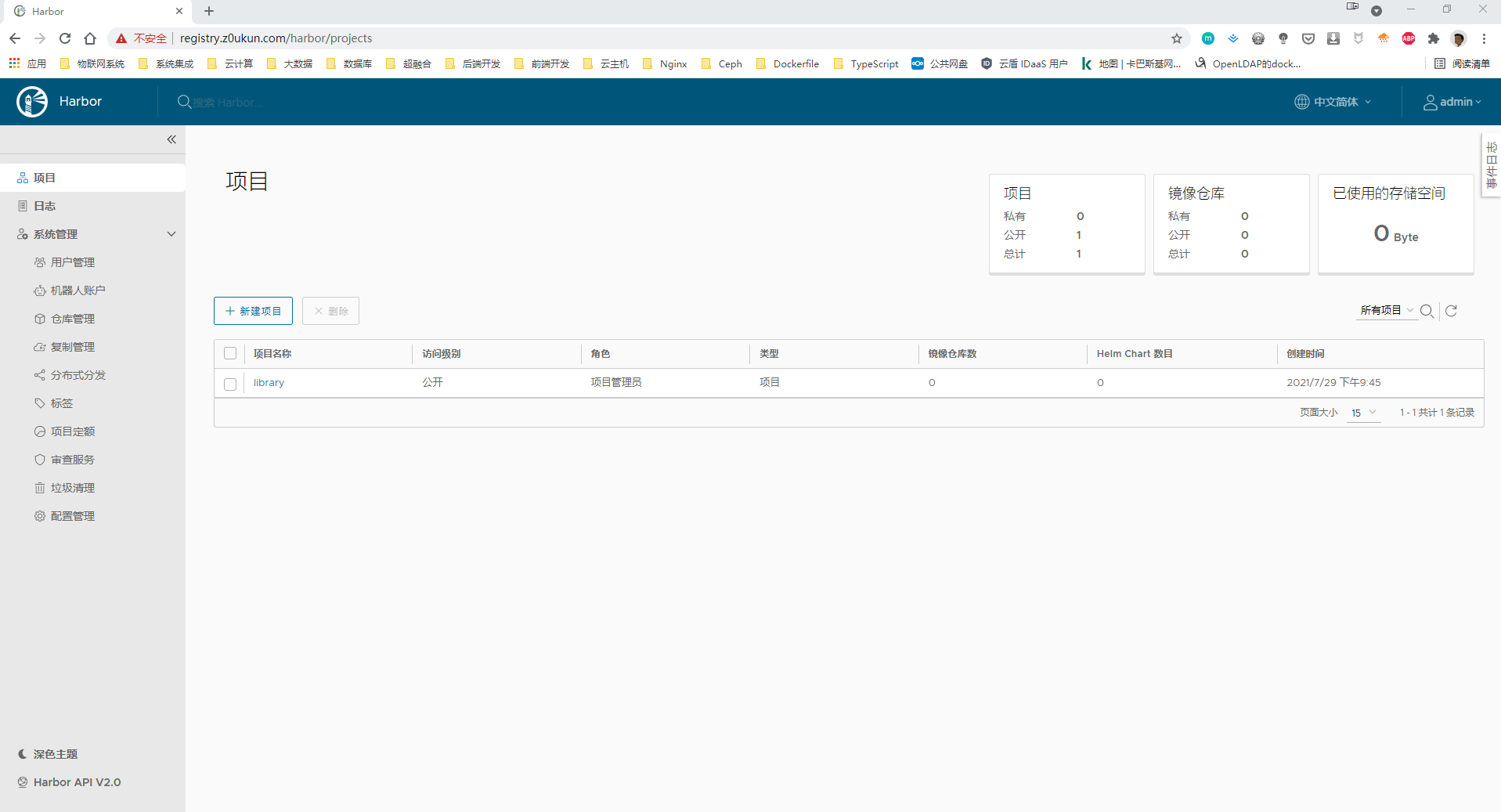

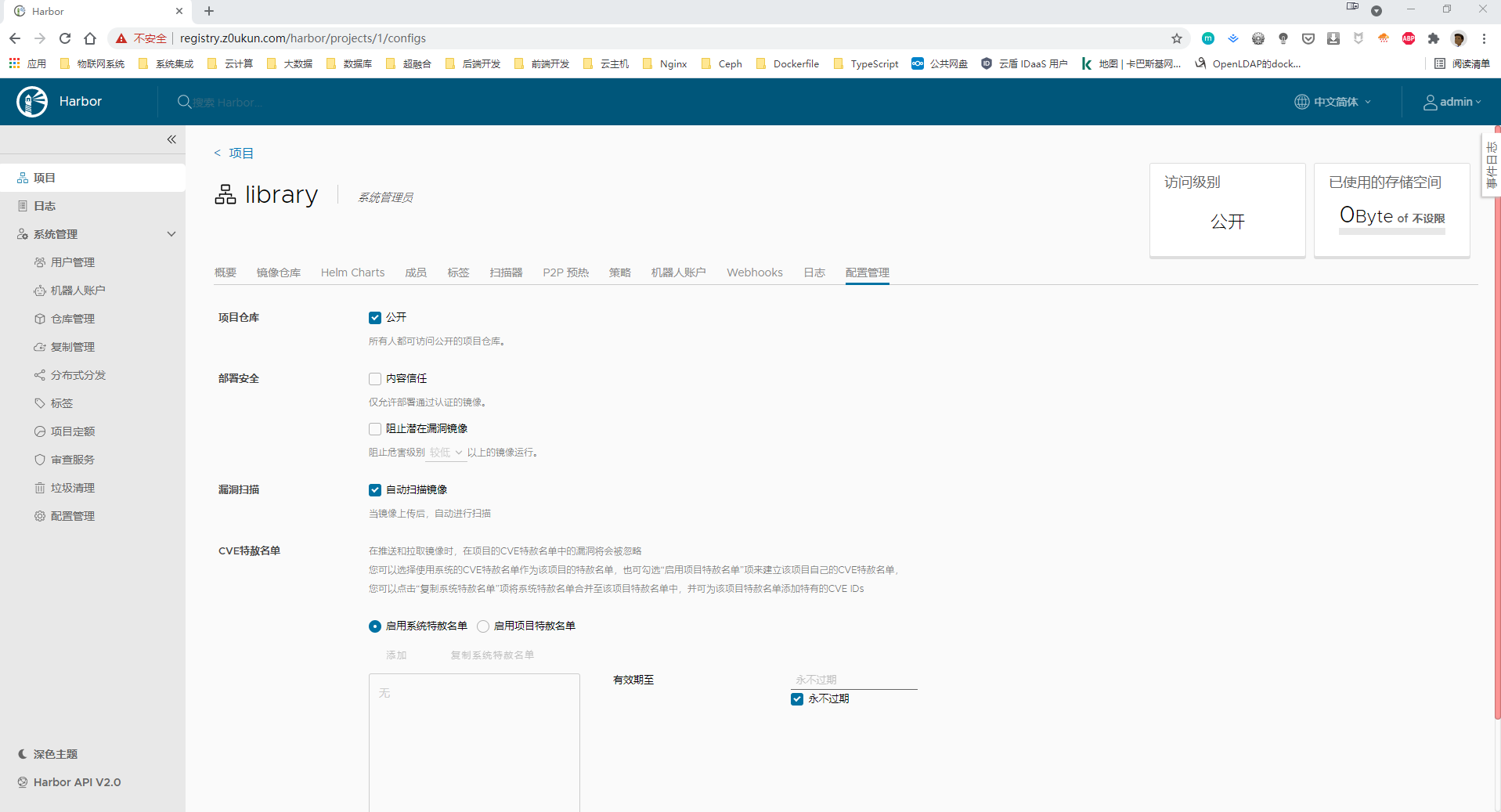

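The Harbor home page offers many features. By default there is a project named library, which is public. Inside a project you can also manage Helm charts (and upload them manually there), and you can configure the project's images, for example whether images are scanned automatically: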

4. Configuring the image registry

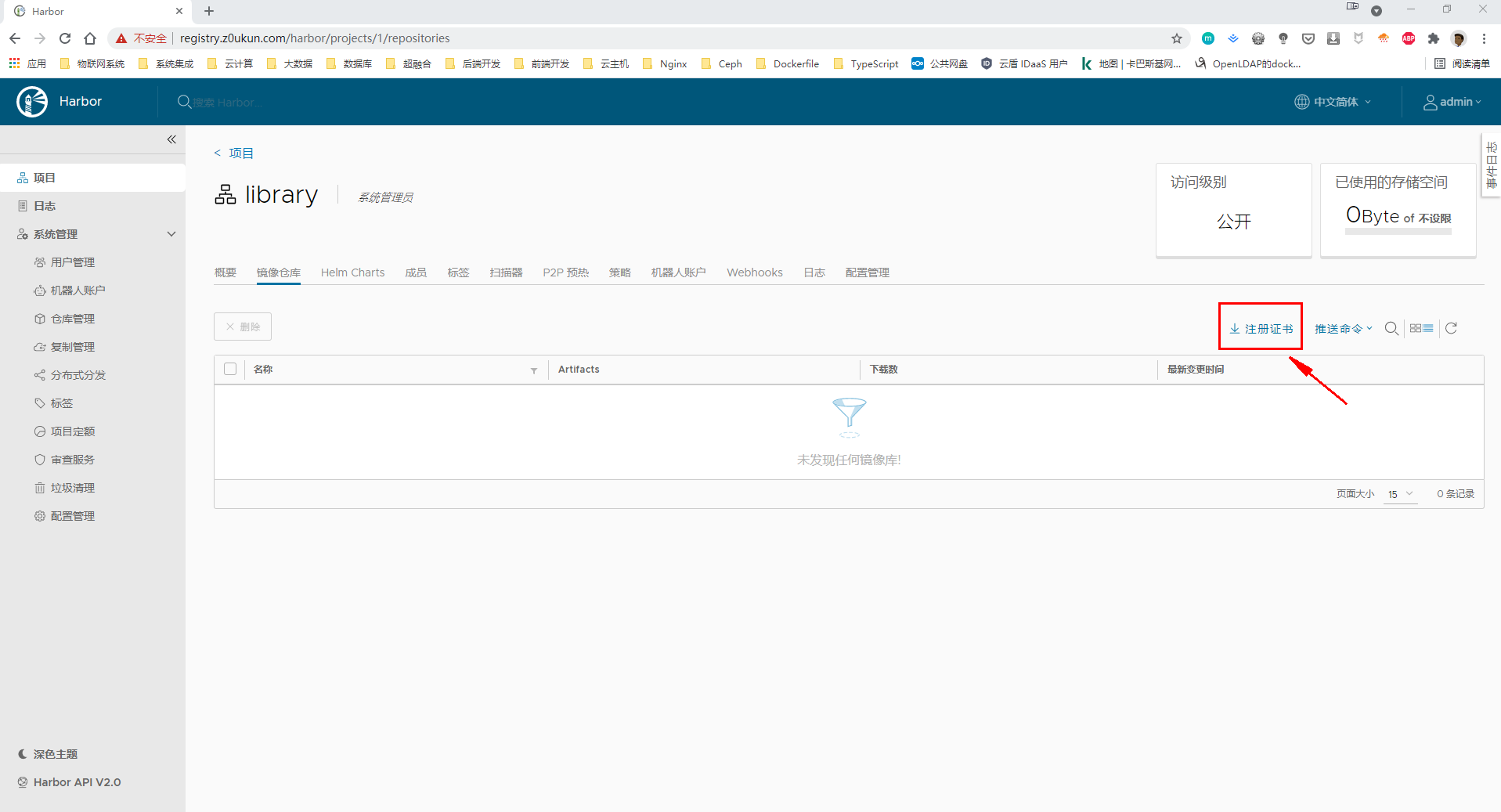

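Logging in to the registry requires the HTTPS CA certificate ca.crt. You can download it from the Harbor portal under Projects > library by clicking REGISTRY CERTIFICATE. After downloading it, copy the certificate contents into the designated directory on the server.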

On the server, create a certs.d folder under /etc/docker and, inside it, a folder named after the Harbor domain (commands below). Then create the CA certificate file from the previous step in /etc/docker/certs.d/registry.z0ukun.com; its contents are:

[root@kubernetes01 ~]# mkdir -p /etc/docker/certs.d/registry.z0ukun.com/
[root@kubernetes01 ~]# cd /etc/docker/certs.d/registry.z0ukun.com/
[root@kubernetes01 registry.z0ukun.com]# cat ca.crt
-----BEGIN CERTIFICATE-----
MIIDEzCCAfugAwIBAgIQGbNEDw9kTrrweuqtpfRfLjANBgkqhkiG9w0BAQsFADAU
MRIwEAYDVQQDEwloYXJib3ItY2EwHhcNMjEwNzI5MTM0NDQzWhcNMjIwNzI5MTM0
NDQzWjAUMRIwEAYDVQQDEwloYXJib3ItY2EwggEiMA0GCSqGSIb3DQEBAQUAA4IB
DwAwggEKAoIBAQDCjm9eUHN8S+T1wxkN0Js0katvSpiCj/Pp2s9CKQB7FlikLk6i
GLzxxkGHJGxa7wa+9xj7c1qGttHkwGKJc7YY163i4DoH6+EhdxBDasEBuwDphh+j
SoKp+MmI8oACyPfShYYV2RiYV5AGE059a7ODc+TXLKNAT/vNG0f4g40EVhaT8SjD
KEzmwN6WbkyUQpy3QplqAtv5WX89tJy85tXH1iw/3e3rLebXxJFrrik5jRe7L7Uc
T6bG7hB2w6D9PX3Ne5OapgAdPFLezHhFbxDQF36bikx2/kWIZZ1Wr1lma/jwk+gp
iAKnzB5A7ryNH5LjAgwgWWlV90+JwfvX1EZXAgMBAAGjYTBfMA4GA1UdDwEB/wQE
AwICpDAdBgNVHSUEFjAUBggrBgEFBQcDAQYIKwYBBQUHAwIwDwYDVR0TAQH/BAUw
AwEB/zAdBgNVHQ4EFgQU2Qc33UVujdkSoyYuYVGt9/vF4+AwDQYJKoZIhvcNAQEL
BQADggEBAFsKe+5rJAzCZKI5WUBuW1U7F+tKmtBajM3X+xmMs3+5VCRqFEEK9PuI
RLF5sQbnhMv6jPvng0ujCDVp6UytV0srU+kgGr5ey5xWaiN4qAto3E50ojJzvrtg
GlRKTNyJg4xLh1jWC34ny0OaAQYm3AputpsaLHmQ2OhkP6VtuKlbtk5j9SoY6uR4
XSoh8cSGngE2VbBXB3q54nT+lY28ltVuUn7tIVJr+uziSeGIXATTJjJseUmSiUvF
xSvLGZCPtRB92Tr/3LvlCj26nstwOhUR3kU+aKOdHjuFtwPCh4/jBX+kkV2tjAEr
Wxga0Hg1D3QLRxKL77aL4cPK6/Ub260=
-----END CERTIFICATE-----
[root@kubernetes01 ~]# docker login registry.z0ukun.com
Username: admin
Password:
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store
Login Succeeded
[root@kubernetes01 ~]#

With the certificate in place, docker login can log in to the Harbor registry; you must log in successfully before you can push images to it.

5. Functional testing

5.1 Pushing an image

Suppose we have pulled a busybox image onto the server and want to push it to our private registry. First re-tag the image with the registry.z0ukun.com prefix, so that docker push knows which registry to push to:

[root@kubernetes01 registry.z0ukun.com]# docker pull busybox
Using default tag: latest
latest: Pulling from library/busybox
b71f96345d44: Pull complete
Digest: sha256:0f354ec1728d9ff32edcd7d1b8bbdfc798277ad36120dc3dc683be44524c8b60
Status: Downloaded newer image for busybox:latest
docker.io/library/busybox:latest
[root@kubernetes01 registry.z0ukun.com]# docker images | grep busybox
busybox                               latest    69593048aa3a   7 weeks ago   1.24MB
[root@kubernetes01 registry.z0ukun.com]# docker tag busybox registry.z0ukun.com/library/busybox
[root@kubernetes01 registry.z0ukun.com]# docker images | grep busybox
busybox                               latest    69593048aa3a   7 weeks ago   1.24MB
registry.z0ukun.com/library/busybox   latest    69593048aa3a   7 weeks ago   1.24MB
[root@kubernetes01 registry.z0ukun.com]# docker push registry.z0ukun.com/library/busybox
Using default tag: latest
The push refers to repository [registry.z0ukun.com/library/busybox]
5b8c72934dfc: Pushed
latest: digest: sha256:dca71257cd2e72840a21f0323234bb2e33fea6d949fa0f21c5102146f583486b size: 527
[root@kubernetes01 registry.z0ukun.com]#

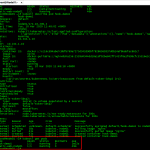

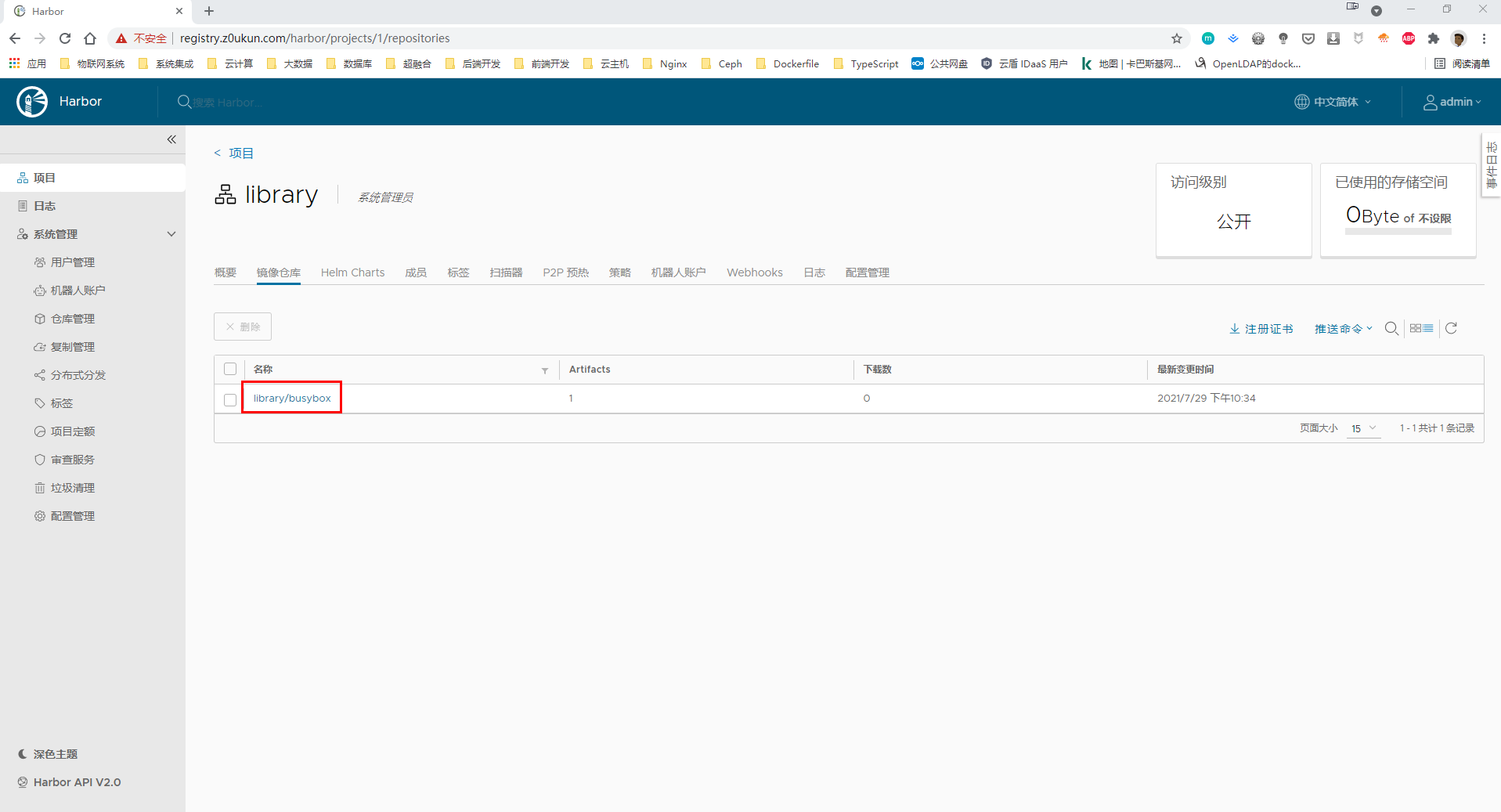

After the push, the image also shows up in the Portal:
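Harbor image references take the form <registry domain>/<project>/<repository>:<tag>, which is why busybox is tagged as registry.z0ukun.com/library/busybox before pushing. Below is a minimal bash sketch of the tag-and-push step. harbor_ref and push_to_harbor are hypothetical helpers; harbor_ref assumes a plain local image name such as busybox or busybox:1.33 (not one that already contains a registry host with a port) and, like docker, defaults the tag to latest.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the tag-and-push step above.

# Build <registry>/<project>/<name>:<tag>; the tag defaults to latest.
harbor_ref() {
  local registry="$1" project="$2" image="$3"
  local name="${image%%:*}" tag="latest"
  if [ "$image" != "$name" ]; then
    tag="${image#*:}"   # keep an explicit tag such as busybox:1.33
  fi
  printf '%s/%s/%s:%s\n' "$registry" "$project" "$name" "$tag"
}

# Tag the local image with the Harbor reference, then push it.
push_to_harbor() {
  local ref
  ref=$(harbor_ref "$1" "$2" "$3") || return 1
  docker tag "$3" "$ref" && docker push "$ref"
}
```

Usage: push_to_harbor registry.z0ukun.com library busybox runs the same docker tag and docker push pair as the session above, with the tag made explicit.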

5.2 Pulling an image

With the push working, let's also test pulling. First delete the local registry.z0ukun.com/library/busybox image, then pull it back with docker pull:

[root@kubernetes01 registry.z0ukun.com]# docker images | grep busybox
busybox                               latest    69593048aa3a   7 weeks ago   1.24MB
registry.z0ukun.com/library/busybox   latest    69593048aa3a   7 weeks ago   1.24MB
[root@kubernetes01 registry.z0ukun.com]# docker rmi registry.z0ukun.com/library/busybox
Untagged: registry.z0ukun.com/library/busybox:latest
Untagged: registry.z0ukun.com/library/busybox@sha256:dca71257cd2e72840a21f0323234bb2e33fea6d949fa0f21c5102146f583486b
[root@kubernetes01 registry.z0ukun.com]# docker images | grep busybox
busybox                               latest    69593048aa3a   7 weeks ago   1.24MB
[root@kubernetes01 registry.z0ukun.com]# docker pull registry.z0ukun.com/library/busybox:latest
latest: Pulling from library/busybox
Digest: sha256:dca71257cd2e72840a21f0323234bb2e33fea6d949fa0f21c5102146f583486b
Status: Downloaded newer image for registry.z0ukun.com/library/busybox:latest
registry.z0ukun.com/library/busybox:latest
[root@kubernetes01 registry.z0ukun.com]# docker images | grep busybox
busybox                               latest    69593048aa3a   7 weeks ago   1.24MB
registry.z0ukun.com/library/busybox   latest    69593048aa3a   7 weeks ago   1.24MB
[root@kubernetes01 registry.z0ukun.com]#

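As shown above, our private Docker registry is up and working. You can go on to create a private project and a new user, then use that user to pull and push images. Harbor also offers other features such as image replication, scanners, and P2P preheating; feel free to explore them yourself, as they are not covered in detail here.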